1. Introduction

In this tutorial, we’ll discuss the asymptotic properties of .

An example of an algorithm whose time complexity corresponds to this function is binary search over permutations of an array.

2. Complexity

We define the complexity class of an algorithm by analyzing how the number of steps it performs increases with the input size.

Technically, a step in an algorithm can be any operation it performs. Although we can focus only on specific ones, such as arithmetic or IO operations, we usually assume that all atomic operations are of the same order, so we count them all. That way, we get a non-negative function mapping an integer

to the number of steps our algorithm performs given the input of size

.

The asymptotic notation distinguishes between several cases.

Big O denotes asymptotic upper bounds. We say that is an asymptotic upper bound of

(denoted as

) if there’s a constant

and an input size

such that for all larger inputs

, we have

.

Big Omega defines asymptotic lower bounds. We say that is an asymptotic lower bound of

(and write

) if there’s a constant

and an input size

such that for all larger inputs

, we have

.

Finally, we say that is of the same order as

(and write

) if

is asymptotically bounded by

from below and above at the same time:

3. The Upper Bound

Let’s note that for every input size , it holds:

From there:

Taking the logarithms of both sides, we get:

To formally prove that , we need to show that there exists

and

such that:

Our derivation shows for every

, so

and

.

3.1. Does the Base of the Logarithm Matter?

Since the logarithmic function is strictly increasing when the base is greater than 1, taking the logarithms of both sides of will preserve the relation

if we use a base

.

These are bases we consider in algorithmic analysis, so we don’t have to be concerned with a fractional base.

4. The Lower Bound

First, let’s note that for any , we have:

Now, let’s use the fact that all these numbers are greater than or equal to

:

Since , we have:

Therefore:

Let’s take logarithms:

Setting and

, we get that:

The proof was longer than for because, to make it rigorous, we had to use

. As is the case for Big O, the base of the logarithm doesn’t matter.

5. The Asymptotic Order and Equivalence

Since we have:

we can conclude that . So,

is of the same order as

.

5.1. Asymptotic Equivalence

However, are these two functions asymptotically equivalent? The relation of asymptotic equivalence is similar to

but has a stronger condition:

For , we must have

starting from some

. For

, it should hold that

and

are approximately the same in the limit. In other words:

5.2. Is log n! ~ n log n?

Let’s compute the limit:

Since every , we can substitute

for

:

This way, we get the upper bound.

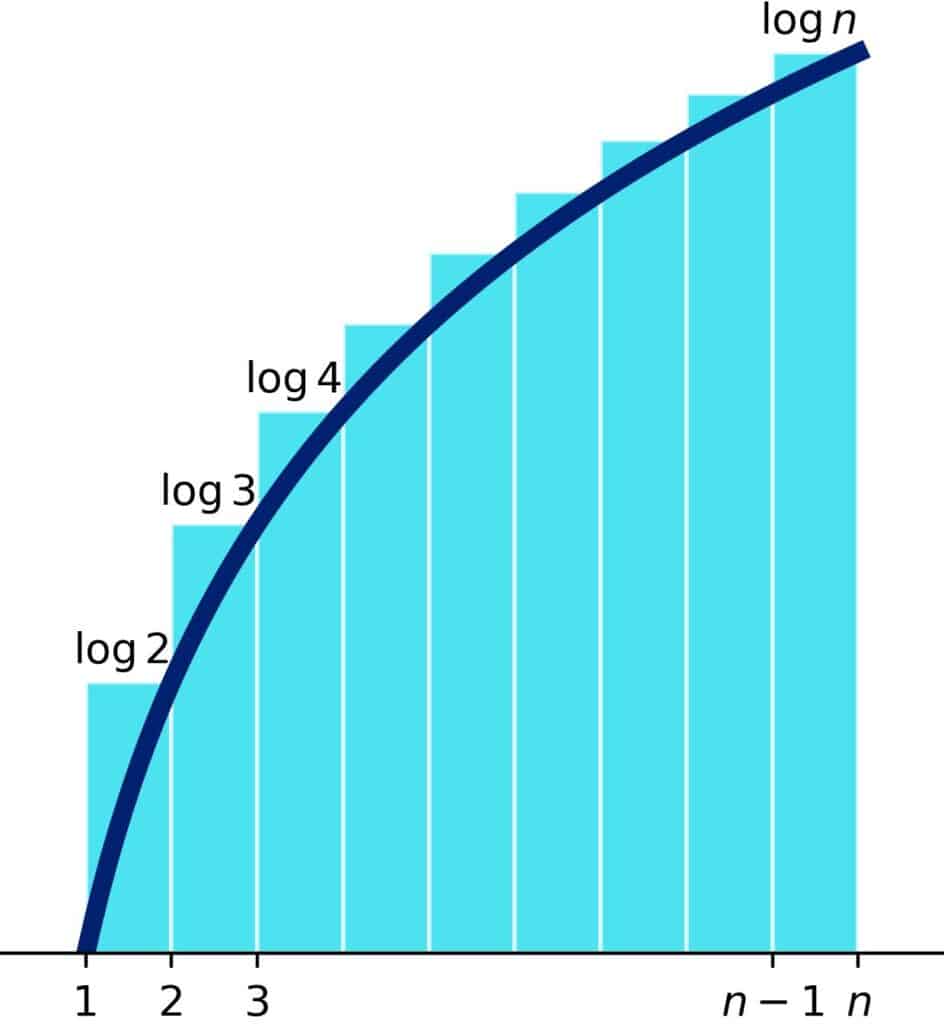

To compute the lower bound, let’s inspect the following plot:

The shaded area equals the sum . It overestimates the area under

between

and

:

Since , we can add it to both sides to get:

For any base , we can integrate by parts to get:

So:

As , the lower bound approaches 1. Therefore, we have:

That means that the limit is also one:

So, it also holds that .

6. Stirling’s Approximation

Stirling’s approximation of (and

) can be derived by approximating the integral of

over

. The formula is:

This means that the error of approximating with

is

, i.e., it’s asymptotically bounded by

. Since the base doesn’t affect the asymptotic behavior, we have:

It can be shown that this approximation can be restated as:

Stirling’s approximation immediately reveals the asymptotic relation between and

, but its proof deals with mathematical details that are out of the scope of this article.

7. Conclusion

In this article, we analyzed the asymptotic properties of . This function determines the complexity of algorithms searching permutation spaces similarly to binary search.

The function is of the same asymptotic order as and is asymptotically equivalent to

.