1. Introduction

In this tutorial, we’ll discuss two critical grammar concepts in compiler design: left recursion and left factoring. We’ll explore their core differences and explain how to optimize parsing performance.

2. Left Recursion

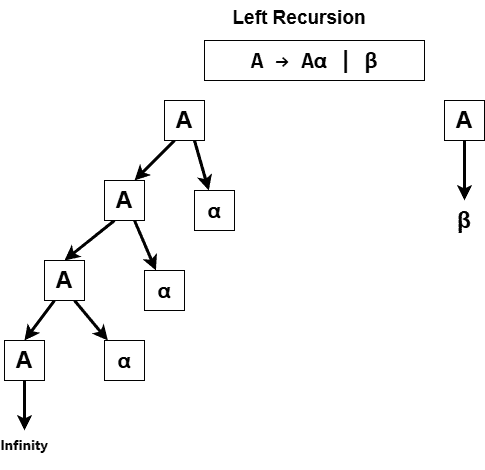

Left recursion occurs when a non-terminal symbol in a grammar rule refers to itself as the leftmost symbol in its production:

Here, A is a non-terminal, is a non-empty string, whereas

is a string that can be empty.

Furthermore, left recursion can be direct, as illustrated above, or indirect, where a non-terminal eventually refers back to itself through a chain of productions. For example:

This recursion is indirect because A depends on B, which depends on A.

2.1. Why Is Left Recursion a Problem?

When some parsers attempt to parse input based on a left-recursive rule, such as , they can get stuck in an infinite loop, repeatedly trying to match A without ever reaching

. This problem is common in top-down parsers (like recursive descent parsers), which expand rules starting from the left side:

This is the case with top-down parsers, such as LL parsers. These parsers try to predict which rule to apply based on the next input token, moving from left to right. However, they can’t do that with a left-recursive rule, as they keep “recursing” without progressing through the input string. As a result, we get an infinite loop in the parser.

For instance, let’s say we have a left-recursive rule for parsing a list of terms separated by commas:

With this rule, the parser starts by trying to match List. Since it also sees List on the right-hand side, it tries to match it again, creating a never-ending cycle.

2.2. How to Eliminate Left Recursion?

We need to eliminate left recursion to make a grammar compatible with LL parsing. The general approach involves restructuring the rules so that recursion happens at the end of a production rather than at the beginning.

For instance, for a rule such as:

the trick is to replace it with:

This new setup uses a helper non-terminal, A′, which lets us handle the recursion “from the right” instead of directly looping back to A (and denotes the empty string).

For example, suppose the input is cdd, and the grammar before elimination is:

Using the left-recursive rule, a top-down parser would attempt:

But after eliminating left recursion, the grammar becomes:

For our previous input cdd, parsing now proceeds as:

Therefore, the transformation ensures the input cdd is successfully parsed, avoiding infinite recursion and making the grammar suitable for LL parsers.

3. Left Factoring

Left factoring is a technique that simplifies grammar rules to make parsing more efficient and less error-prone. This approach is especially helpful for top-down parsers, which can run into trouble when faced with ambiguous or left-recursive grammars.

The basic idea behind left factoring is to rewrite the rules that share beginnings (prefixes) so that the parser doesn’t need to backtrack or guess which rule to apply.

3.1. Why Do We Use Left Factoring?

Top-down parsers process input by scanning symbols from left to right and matching them against the grammar rules. If two or more rules start with the same symbols, the parser can’t immediately decide which one to follow. This results in backtracking or even parsing errors, making the process less efficient.

Left factoring removes this ambiguity by isolating the common parts of rules, reducing the need for the parser to backtrack. With left factoring, we get a clear, step-by-step process that the parser can follow smoothly.

Let’s look at this grammar that’s not left-factored:

In this example, both rules for A start with the symbol . Without left factoring, the parser has to guess or backtrack after reading

, which can lead to parsing issues.

To apply left factoring, we rewrite this rule to group the common prefix :

Now, the parser matches and then decides between

and

based on what follows. This way, the parser no longer needs to backtrack, so parsing is smoother.

3.2. Example

Let’s consider the input ab and the following grammar that’s not left-factored:

For the input ab, a top-down parser without left factoring faces the following challenges:

- After reading a (

), it cannot immediately determine whether to follow

or

because both start with a

- The parser must backtrack or use lookahead to resolve the ambiguity, complicating the parsing

By applying left factoring, we rewrite the grammar as follows:

Now, parsing the input ab is straightforward:

This process is direct and eliminates the need for backtracking. The parser first matches the common prefix a and then distinguishes between and

based on what follows, ensuring efficient parsing.

In general, left factoring reduces ambiguity, eliminates the need for backtracking, and simplifies the implementation of LL parsers.

4. Key Differences Between Left Factoring and Left Recursion

Left factoring and left recursion are distinct concepts in grammar design with different impacts on parser efficiency and compatibility:

Aspect

Left Recursion

Left Factoring

Definition

Non-terminal appears as the leftmost symbol in its production.

Eliminates common prefixes in productions.

Purpose

Expresses repetitive structures.

Reduces ambiguity and improves parser efficiency.

Impact on Grammar

It makes grammar unsuitable for top-down parsers.

Introduces new non-terminals for common prefixes.

Parser Efficiency

Causes infinite loops in top-down parsers.

Improves efficiency by reducing choices.

Ambiguity Resolution

Introduces ambiguity if not handled properly.

Helps resolve ambiguity.

Compatibility

Incompatible with top-down parsers and requires elimination.

Compatible with both top-down and bottom-up parsers.

Left factoring improves grammar clarity and efficiency, while left recursion can cause issues for top-down parsers.

5. Conclusion

In this article, we discussed two important concepts in the design of formal grammars: left recursion and left factoring. Left factoring is a technique used to eliminate common prefixes in productions, improving grammar clarity and parser efficiency. It’s compatible with both top-down and bottom-up parsers and helps resolve ambiguity.

On the other hand, left recursion, where a non-terminal appears as the leftmost symbol in its production, can express repetitive structures but causes issues for top-down parsers by potentially leading to infinite loops. While left recursion is generally incompatible with top-down parsers, it can be handled by bottom-up parsers without modification.