1. Introduction

Artificial Intelligence (AI) has made remarkable advances in recent years, with deep learning models achieving noteworthy success in various domains. However, these models often function as “black boxes,” making it difficult to understand their reasoning. Conversely, symbolic AI follows predefined rules and excels at logical reasoning but doesn’t learn from data.

Neurosymbolic AI bridges this gap by combining neural networks for learning (pattern recognition) with symbolic AI for logical reasoning. This hybrid approach allows AI to learn from data while ensuring structured, explainable decision-making.

In this tutorial, we’ll explore Neurosymbolic AI, how it works, its benefits and challenges, and some future directions in this field.

2. Overview

Let’s say we have a deep network that can detect disease patterns. However, without symbolic reasoning, it may misinterpret symptoms or recommend inconsistent treatments.

For example, a neural model might suggest antibiotics for the flu based on symptom patterns, even though antibiotics don’t treat viral infections. A symbolic rule, such as “don’t prescribe antibiotics for viral diagnoses,” can prevent such errors by incorporating medical knowledge and guidelines.

This hybrid approach is beneficial in fields where trust and interpretability are essential. In particular, Neurosymbolic AI is valuable in healthcare, finance, autonomous systems, and scientific research.

3. The Two Pillars of Neurosymbolic AI

To understand how Neurosymbolic AI works, we must explore its two fundamental components: Neural Networks and Symbolic AI.

3.1. Neural Networks (Connectionist AI)

Neural networks, often referred to as connectionist AI, are inspired by the structure and function of the human brain. These models comprise layers of interconnected nodes (neurons) that process and transform data to learn patterns and make predictions.

Neural networks’ strengths include their ability to detect complex patterns in images, text, and speech and their adaptability to unseen data. However, their weaknesses are that they often function as black boxes, making it difficult to understand their decisions. Moreover, they struggle with explicit rules, structured reasoning, and symbolic manipulation and require large amounts of labeled data to train effectively.

A good example of a neural network’s limitation is an image classifier that can recognize objects in a photo but can’t explain why an object belongs to a specific category. This lack of reasoning makes it challenging to trust neural networks in critical applications like medicine or law.

3.2. Symbolic AI (Rule-Based Systems)

Symbolic AI, also known as Good Old-Fashioned AI (GOFAI), relies on explicit rules, logic, and structured representations to perform reasoning and decision-making. Instead of learning from data, symbolic systems use if-then rules, logic programming, and ontologies to represent and manipulate knowledge.

The strengths of Symbolic AI include explainability, as decisions are based on transparent and interpretable logic rules. It also features logical reasoning, allowing it to perform deduction, inference, and planning with well-defined constraints. Additionally, Symbolic AI is data-efficient; it doesn’t require large datasets and works well with domain knowledge provided by humans.

However, Symbolic AI lacks adaptability, struggles with uncertainty, and generalizes poorly to unstructured data. Furthermore, it has limited learning ability, requiring manual updates instead of improving from experience like neural networks.

For example, a symbolic AI system for medical diagnosis might use predefined rules like: “If a patient has a fever, sore throat, and fatigue, they may have a viral infection.” While this is useful, the system of rules is predefined. It can’t learn new medical conditions on its own or adapt to patient variations unless a human updates the rule set.
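To make the rule-based behavior concrete, here is a minimal sketch of such a system in plain Python. The `Rule` class, the `RULES` list, and the `diagnose` function are hypothetical names introduced for illustration, and the second rule is an invented example rather than medical guidance:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """If every symptom in `conditions` is present, conclude `conclusion`."""
    conditions: frozenset
    conclusion: str


# Hypothetical rule base; a human expert has to write and maintain it.
RULES = [
    Rule(frozenset({"fever", "sore throat", "fatigue"}), "possible viral infection"),
    Rule(frozenset({"sneezing", "runny nose"}), "possible common cold"),
]


def diagnose(symptoms, rules=RULES):
    """Return (conclusion, matched conditions) for every rule that fires."""
    observed = set(symptoms)
    return [
        (rule.conclusion, sorted(rule.conditions))
        for rule in rules
        if rule.conditions <= observed  # all conditions are among the symptoms
    ]


for conclusion, because in diagnose(["fever", "fatigue", "sore throat", "headache"]):
    print(f"{conclusion} (because: {', '.join(because)})")

# A symptom set that no rule covers produces no conclusion at all.
print(diagnose(["rash", "joint pain"]))
```

Every conclusion comes with the conditions that triggered it, which is where the explainability comes from. The second call shows the limitation: the system stays silent on anything outside its rule base until someone adds a rule.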

In the next section, we’ll explore how these two paradigms can be integrated to form a more intelligent and human-like AI.

4. How Neurosymbolic AI Works

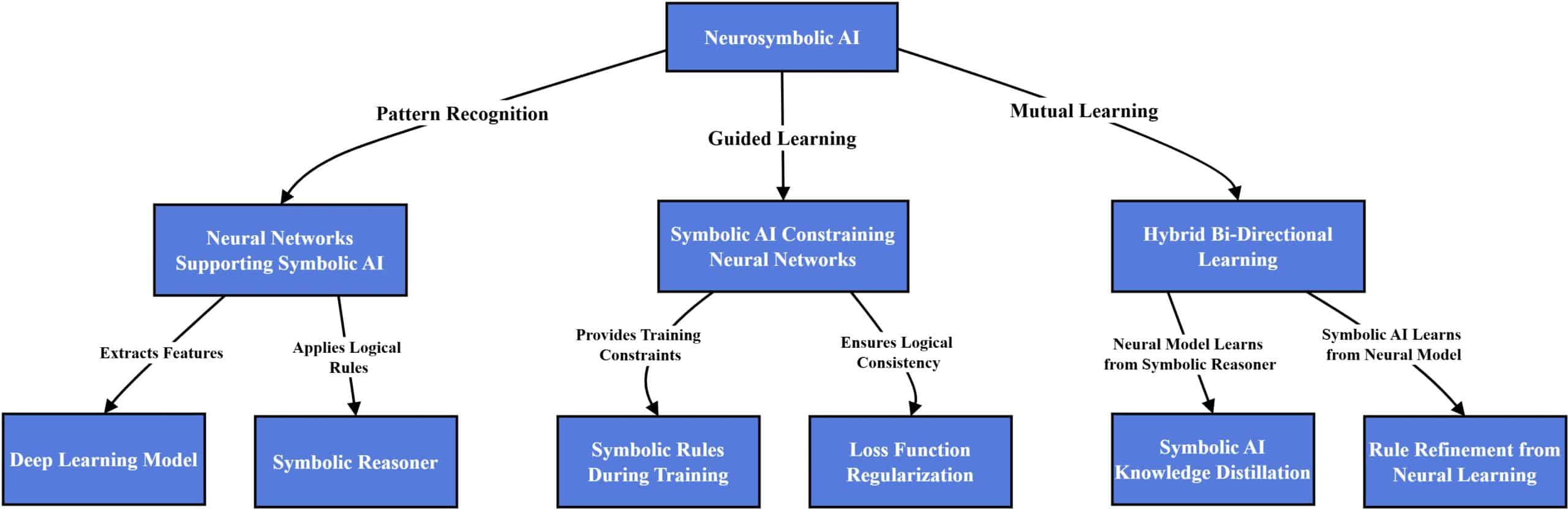

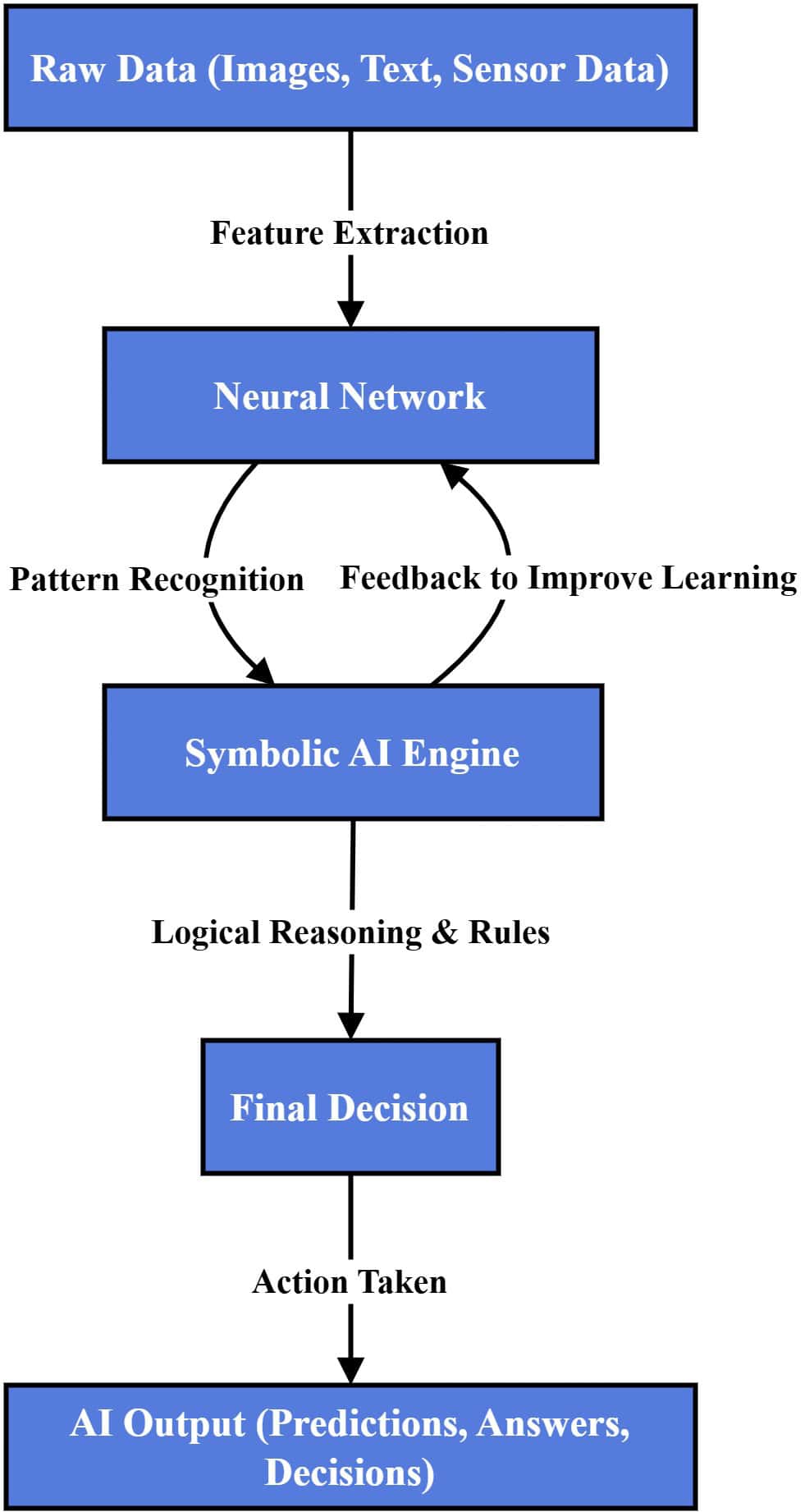

Neurosymbolic AI integrates neural networks with symbolic reasoning using different approaches. Some systems use deep learning to extract patterns from raw data before applying symbolic logic for reasoning, while others use symbolic rules during training to guide and constrain neural learning.

More advanced architectures enable bi-directional interaction, where neural networks learn from symbolic rules, and symbolic AI refines its knowledge based on neural insights. This continuous feedback loop allows both components to evolve dynamically, improving both learning efficiency and reasoning accuracy.

4.1. Neural Networks Supporting Symbolic Reasoning

In this approach, a deep learning model extracts features from unstructured data (images, text, or speech), and a symbolic reasoning system applies logical rules to interpret relationships:

For example, a neural network can detect objects in an image (e.g., returning “dog” and “ball”), and a symbolic reasoner will infer logical relationships (e.g., “If the ball is in front of the dog, the dog might be playing with it”).

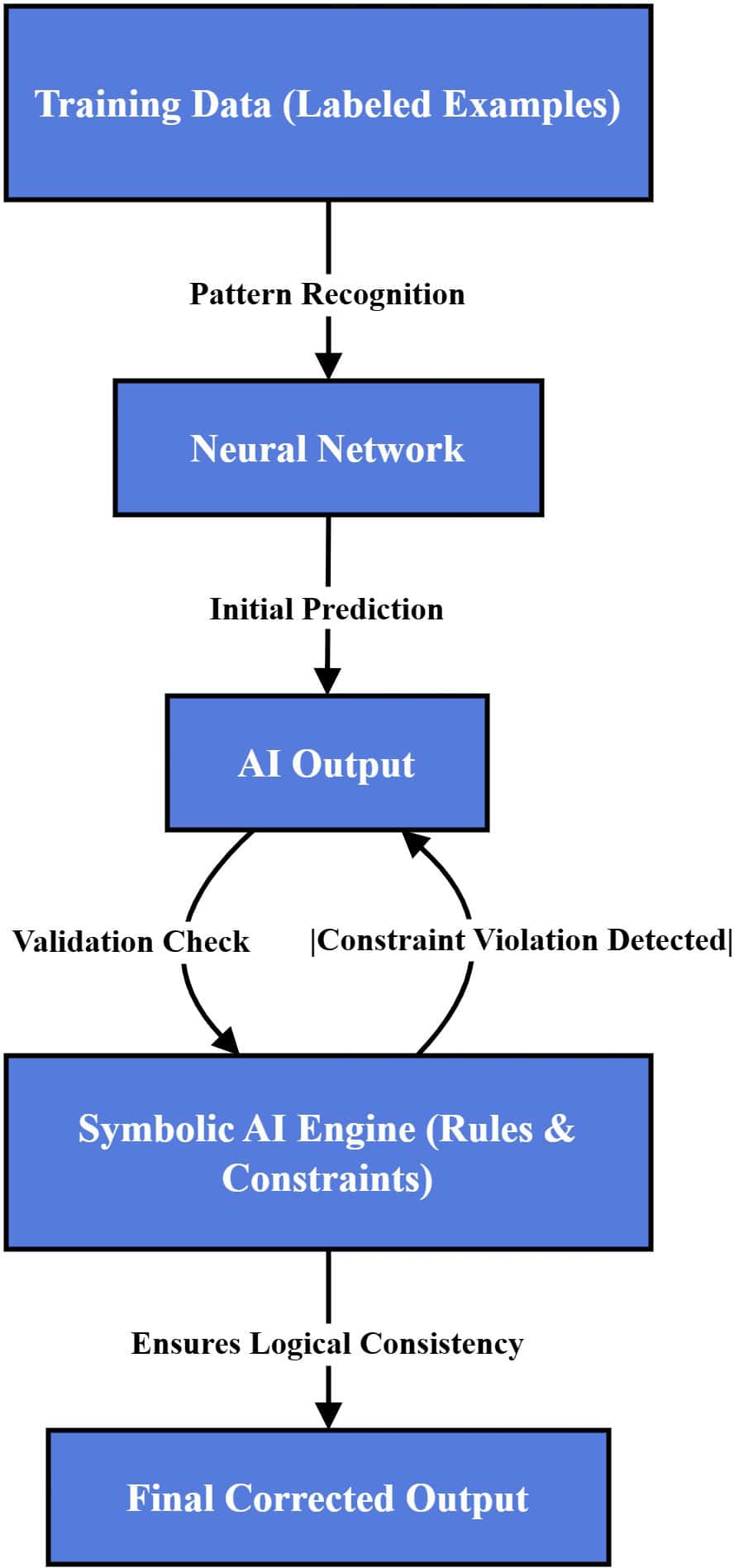
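The dog-and-ball example can be sketched as a two-stage pipeline. In this sketch, `detect_objects` is only a stand-in that returns fixed, made-up detections instead of running a real neural detector. The `Detection` fields and the `in_front_of` threshold are likewise assumptions chosen for illustration:

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    x: float      # horizontal center of the bounding box, in the range 0..1
    depth: float  # estimated distance from the camera; smaller means closer


def detect_objects(image):
    """Stand-in for a neural detector; returns fixed, made-up detections."""
    return [Detection("dog", 0.40, 3.0), Detection("ball", 0.45, 2.5)]


def in_front_of(a, b, max_offset=0.2):
    """a is in front of b if it is closer to the camera and roughly aligned."""
    return a.depth < b.depth and abs(a.x - b.x) <= max_offset


def infer_relations(detections):
    """Symbolic stage: derive facts from the detector's output."""
    by_label = {d.label: d for d in detections}
    dog, ball = by_label.get("dog"), by_label.get("ball")
    facts = []
    if dog is not None and ball is not None and in_front_of(ball, dog):
        facts.append("in_front_of(ball, dog)")
        # Rule: if the ball is in front of the dog, the dog might be playing with it.
        facts.append("might_be_playing_with(dog, ball)")
    return facts


print(infer_relations(detect_objects(image=None)))
```

The neural stage only has to output labels and positions. The relationships are derived by explicit rules that can be inspected and changed without retraining the detector.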

4.2. Symbolic Logic Constraining Neural Networks

This approach applies predefined rules as a framework during training or inference to improve neural network decision-making. Symbolic logic acts as a filter, ensuring that the AI model adheres to predefined constraints:

For example, a deep learning model may detect a condition in medical diagnosis, but symbolic reasoning ensures recommendations align with medical guidelines.

Let’s look at a simple Python example of applying symbolic constraints during training, using TensorFlow and constraint-based regularization:

    import tensorflow as tf

    # Class order in the output layer: index 0 = Flu, 1 = Common Cold, 2 = None

    # Symbolic constraint: P(Flu) must be >= P(Common Cold)
    def medical_constraint(y_pred):
        # Positive only when P(Common Cold) exceeds P(Flu), i.e., when the rule is violated
        return tf.maximum(0.0, y_pred[:, 1] - y_pred[:, 0])

    # Custom loss function integrating the symbolic rule
    def custom_loss(y_true, y_pred):
        base_loss = tf.keras.losses.categorical_crossentropy(y_true, y_pred)
        constraint_penalty = 0.1 * tf.reduce_mean(medical_constraint(y_pred))
        return base_loss + constraint_penalty  # Penalize constraint violations

    # Apply the symbolic constraint in model training
    model = tf.keras.models.Sequential([
        tf.keras.layers.Dense(32, activation="relu", input_shape=(10,)),
        tf.keras.layers.Dense(3, activation="softmax"),  # Three classes: Flu, Cold, None
    ])

    model.compile(optimizer="adam", loss=custom_loss, metrics=["accuracy"])

Here, we define a symbolic constraint to ensure that the predicted probability of “Flu” is greater than or equal to that of “Common Cold.” The custom loss function adds a penalty whenever this rule is violated. By integrating the rule into the loss function, we steer training toward outputs that are both accurate and logically consistent.

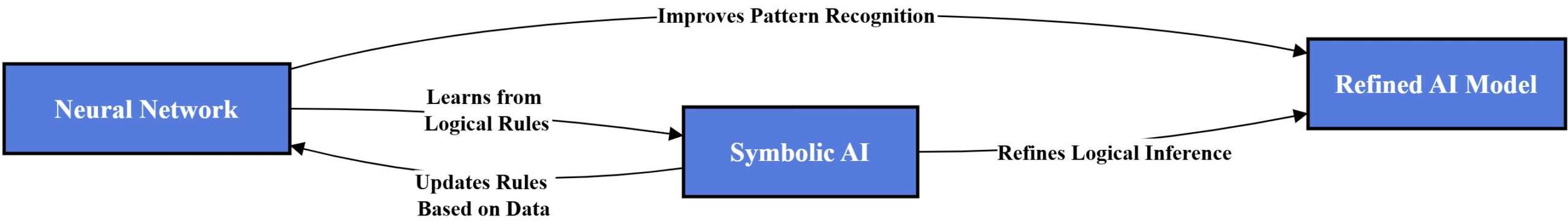
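The loss-based constraint shapes training, but the same kind of rule can also act as a filter at inference time. The sketch below applies the earlier rule, “don’t prescribe antibiotics for viral diagnoses,” to a model’s treatment scores. The `VIRAL_DIAGNOSES` set, the function names, and the scores are hypothetical values for illustration, not clinical guidance:

```python
# Assumed knowledge base: diagnoses that the rule treats as viral.
VIRAL_DIAGNOSES = {"flu", "common cold"}


def violates_guideline(diagnosis, treatment):
    """Rule: don't prescribe antibiotics for viral diagnoses."""
    return diagnosis in VIRAL_DIAGNOSES and treatment == "antibiotics"


def recommend(diagnosis, treatment_scores):
    """Pick the highest-scoring treatment that satisfies the rule."""
    allowed = {
        treatment: score
        for treatment, score in treatment_scores.items()
        if not violates_guideline(diagnosis, treatment)
    }
    if not allowed:
        return None  # nothing passes the filter; defer to a human
    return max(allowed, key=allowed.get)


# Made-up scores standing in for a neural model's output.
scores = {"antibiotics": 0.55, "rest and fluids": 0.35, "antivirals": 0.10}

print(recommend("flu", scores))           # rest and fluids
print(recommend("strep throat", scores))  # antibiotics
```

Unlike the soft penalty in the loss function, this filter is a hard constraint: a violating recommendation can never be returned, no matter how high the model scores it.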

4.3. Hybrid Architectures: Bi-Directional Learning

In a hybrid approach, neural networks learn from symbolic rules, and symbolic inference can also be refined using patterns learned by the neural model.

For example, if the neural network consistently makes correct predictions that aren’t captured by existing rules, those patterns can be used to propose new symbolic rules or adjust existing ones.

These two processes can happen simultaneously in tightly integrated systems, but not always. Some architectures implement only one-way interaction, where either symbolic logic constrains the neural model or the neural model mimics reasoning without updating the symbolic component.
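One simple way to picture the neural-to-symbolic direction is a rule-proposal step that looks for feature patterns the model handles well but no rule covers yet. The sketch below is an assumption-laden illustration rather than an established algorithm: `propose_rules`, its thresholds, and the example data are all hypothetical.

```python
from collections import Counter, defaultdict


def propose_rules(examples, existing_rules, min_support=3, min_precision=0.9):
    """Suggest new rules from patterns the neural model predicts reliably.

    examples: iterable of (features, predicted_label, was_correct)
    existing_rules: dict mapping frozenset of features -> label
    """
    totals = Counter()              # how often each uncovered pattern occurs
    correct = defaultdict(Counter)  # correct predictions per pattern and label

    for features, label, was_correct in examples:
        key = frozenset(features)
        if key in existing_rules:
            continue  # already covered by the symbolic component
        totals[key] += 1
        if was_correct:
            correct[key][label] += 1

    proposals = {}
    for key, count in totals.items():
        if count < min_support or not correct[key]:
            continue
        label, hits = correct[key].most_common(1)[0]
        if hits / count >= min_precision:
            proposals[key] = label
    return proposals


existing = {frozenset({"fever", "sore throat", "fatigue"}): "viral infection"}
observed = (
    [({"cough", "loss of smell"}, "viral infection", True)] * 4
    + [({"rash"}, "allergy", True)]
    + [({"fever", "sore throat", "fatigue"}, "viral infection", True)] * 5
)

for pattern, label in propose_rules(observed, existing).items():
    print(f"IF {sorted(pattern)} THEN {label}")
```

In this sketch, only the cough-and-loss-of-smell pattern is proposed: the rash pattern appears too rarely, and the third pattern is already in the rule base. In practice, proposals like these would typically be reviewed by a domain expert before they join the rule set.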

5. Key Models and Architectures

Several AI frameworks have been developed to implement Neurosymbolic AI, each integrating neural learning with symbolic reasoning in different ways:

| Model | Description | Key Applications |
|---|---|---|
| Neuro-Symbolic Concept Learner (NSCL) | Neural model extracts features and symbolic AI reasons over them. | Visual Question Answering, Object Recognition |
| DeepProbLog | Extends Prolog with deep learning for probabilistic reasoning. | Robotics, Automated Decision-Making |
| Logic Tensor Networks (LTNs) | Merges neural learning with logical constraints. | Knowledge Graphs, AI-assisted Scientific Discovery |

NSCL processes an image using deep learning to recognize objects and then applies symbolic reasoning to answer structured questions.
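The division of labor can be sketched as follows. This is not the NSCL implementation: `detect_scene` is a stand-in that returns made-up object attributes instead of running a perception network, and the question is already given as a hand-written symbolic program. The operation names `filter`, `count`, and `exists` are assumptions for illustration:

```python
def detect_scene(image):
    """Stand-in for the neural perception stage; returns made-up attributes."""
    return [
        {"shape": "cube", "color": "red"},
        {"shape": "sphere", "color": "red"},
        {"shape": "cube", "color": "blue"},
    ]


def execute(program, objects):
    """Run a sequence of symbolic operations over the detected objects."""
    result = objects
    for op, *args in program:
        if op == "filter":
            attribute, value = args
            result = [obj for obj in result if obj[attribute] == value]
        elif op == "count":
            result = len(result)
        elif op == "exists":
            result = len(result) > 0
        else:
            raise ValueError(f"unknown operation: {op}")
    return result


scene = detect_scene(image=None)

# "How many red cubes are there?"
how_many_red_cubes = [("filter", "color", "red"), ("filter", "shape", "cube"), ("count",)]
print(execute(how_many_red_cubes, scene))  # 1

# "Is there a blue sphere?"
any_blue_sphere = [("filter", "color", "blue"), ("filter", "shape", "sphere"), ("exists",)]
print(execute(any_blue_sphere, scene))  # False
```

Each answer can be traced step by step through the program, which is what makes the reasoning stage inspectable.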

Another well-known model is DeepProbLog, which extends ProbLog, a probabilistic variant of the Prolog programming language, with deep learning capabilities.
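The core idea, treating a neural network’s output probabilities as probabilistic facts that a logic program reasons over, can be illustrated with a toy task: computing the distribution of the sum of two digits from two classifier outputs. The sketch below uses plain Python rather than the DeepProbLog API. The function name and the probabilities are made up, and the digit range is restricted to 0..2 to keep the numbers readable:

```python
def sum_distribution(p_first, p_second):
    """P(a + b = s), where a and b are independent digit predictions.

    Logical rule: sum(S) holds if digit1(A), digit2(B), and S = A + B.
    The probability of each S adds up the products over all matching (A, B).
    """
    dist = {}
    for a, prob_a in enumerate(p_first):
        for b, prob_b in enumerate(p_second):
            dist[a + b] = dist.get(a + b, 0.0) + prob_a * prob_b
    return dist


# Made-up classifier outputs over the digits 0, 1, 2.
p_first = [0.1, 0.8, 0.1]   # most likely a 1
p_second = [0.0, 0.3, 0.7]  # most likely a 2

for total, prob in sorted(sum_distribution(p_first, p_second).items()):
    print(f"P(sum = {total}) = {prob:.2f}")
```

Because the result is a differentiable function of the input probabilities, a framework built on this idea can, in principle, train the classifiers from supervision on the sum alone. Here, the sketch only shows the forward computation.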

LTNs enhance AI’s reasoning ability over structured knowledge, such as relationships in knowledge graphs.
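LTNs build on fuzzy, real-valued logic, where a statement has a degree of truth between 0 and 1 instead of being strictly true or false. The sketch below shows how the satisfaction of a rule such as “every flu case is a viral infection” could be scored and turned into a loss. It doesn’t use any LTN library. The operator choices (Reichenbach implication, mean aggregation) are just one possible option, and all truth values are made up:

```python
def fuzzy_implies(a, b):
    """Reichenbach implication: close to 1 unless a is true and b is false."""
    return 1.0 - a + a * b


def forall(truth_values):
    """Aggregate over all examples; the mean is a deliberately simple choice."""
    return sum(truth_values) / len(truth_values)


def rule_satisfaction(is_flu, is_viral):
    """Degree to which 'for all x: Flu(x) -> Viral(x)' holds on the data."""
    return forall([fuzzy_implies(f, v) for f, v in zip(is_flu, is_viral)])


# Made-up network outputs for three patients.
is_flu = [0.9, 0.2, 0.8]
is_viral = [0.95, 0.1, 0.3]  # the third patient contradicts the rule

satisfaction = rule_satisfaction(is_flu, is_viral)
loss = 1.0 - satisfaction  # minimizing this pushes the outputs toward the rule

print(f"satisfaction = {satisfaction:.3f}, loss = {loss:.3f}")
```

The third patient, predicted as flu with high confidence but as viral with low confidence, pulls the satisfaction down. Gradient-based training on this loss would nudge the two predictions toward consistency.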

6. Benefits and Challenges

Neurosymbolic AI combines neural networks’ adaptability with symbolic AI’s structured reasoning, creating more intelligent, interpretable, and efficient systems. However, integrating these two paradigms presents scalability, computational cost, and complexity challenges:

| Benefits | Challenges |
|---|---|
| Improved Explainability: Decisions are more transparent, reducing AI’s black-box nature. | Complex Integration: Combining symbolic reasoning with deep learning requires careful design. |
| Better Abstract Reasoning: AI can go beyond pattern recognition and infer logical relationships. | High Computational Cost: Hybrid models may demand more processing power than purely neural approaches. |
| Data Efficiency: Requires fewer labeled examples by incorporating symbolic knowledge. | Knowledge Base Maintenance: Symbolic AI components need predefined rules that require expert updates. |
| Stronger Generalization: Adapts to new situations while maintaining logical consistency. | Interpretability Trade-offs: Hybrid models, while explainable, still add complexity to understanding AI decisions. |

Despite these challenges, Neurosymbolic AI offers a powerful approach to making AI systems more transparent, adaptable, and effective across various domains. With ongoing research, many of these limitations are expected to be overcome.

7. The Future of Neurosymbolic AI

Many experts see Neurosymbolic AI as a step toward Artificial General Intelligence. By combining neural learning with logical reasoning, AI can move beyond narrow tasks and generalize across multiple domains, making decisions more dynamically and adapting based on experience. This could enable AI to function more like human intelligence, improving problem-solving and decision-making.

Industries are already integrating Neurosymbolic AI into healthcare, autonomous systems, and natural language processing. Medical AI systems use symbolic knowledge to improve diagnoses, and self-driving technology combines real-time perception with logical decision rules.

As hybrid AI models become more efficient, their adoption is expected to grow. However, evaluating these models requires more than just accuracy: factors such as explainability, logical consistency, and robustness are equally important.
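As a small illustration of why accuracy alone can be misleading, the sketch below scores two hypothetical models on both accuracy and logical consistency, measured as the share of predictions that break a rule. The `evaluate` function, the rule, and all data are made up for illustration:

```python
def evaluate(predictions, labels, violates_rule):
    """Return (accuracy, rule violation rate) for a list of predictions."""
    n = len(predictions)
    accuracy = sum(pred == label for pred, label in zip(predictions, labels)) / n
    violation_rate = sum(violates_rule(pred) for pred in predictions) / n
    return accuracy, violation_rate


def antibiotics_for_viral(prediction):
    """Rule check: antibiotics must not be recommended for viral diagnoses."""
    diagnosis, treatment = prediction
    return diagnosis in {"flu", "common cold"} and treatment == "antibiotics"


labels = [
    ("flu", "rest"),
    ("flu", "rest"),
    ("strep throat", "antibiotics"),
    ("common cold", "rest"),
]

# Both models get the second case wrong, but in different ways.
model_a = [labels[0], ("flu", "antibiotics"), labels[2], labels[3]]
model_b = [labels[0], ("flu", "antivirals"), labels[2], labels[3]]

print(evaluate(model_a, labels, antibiotics_for_viral))  # (0.75, 0.25)
print(evaluate(model_b, labels, antibiotics_for_viral))  # (0.75, 0.0)
```

Both models reach the same accuracy, yet only the first one produces a recommendation that contradicts the rule, a difference that an accuracy-only evaluation would miss.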

8. Conclusion

In this article, we explored Neurosymbolic AI, a hybrid approach that combines deep learning’s pattern recognition capabilities with symbolic AI’s structured reasoning. By integrating these two paradigms, Neurosymbolic AI creates systems that can learn from data and apply logical reasoning, making them more adaptable and interpretable than traditional AI models.

We examined how Neurosymbolic AI works and discussed integration strategies, along with several models and architectures for implementing it. As more efficient and scalable models are developed, this hybrid approach could become essential to AI-driven applications across various industries.