1. Introduction

In this tutorial, we’ll explain the mathematics and intuition behind the family of Beta distributions in statistics and analyze their shapes.

2. Intuition

Let’s say we flip a fair coin 10 times and bet on tails with our friend. Since heads and tails are equally likely each time, our win probability in each toss is 1/2.

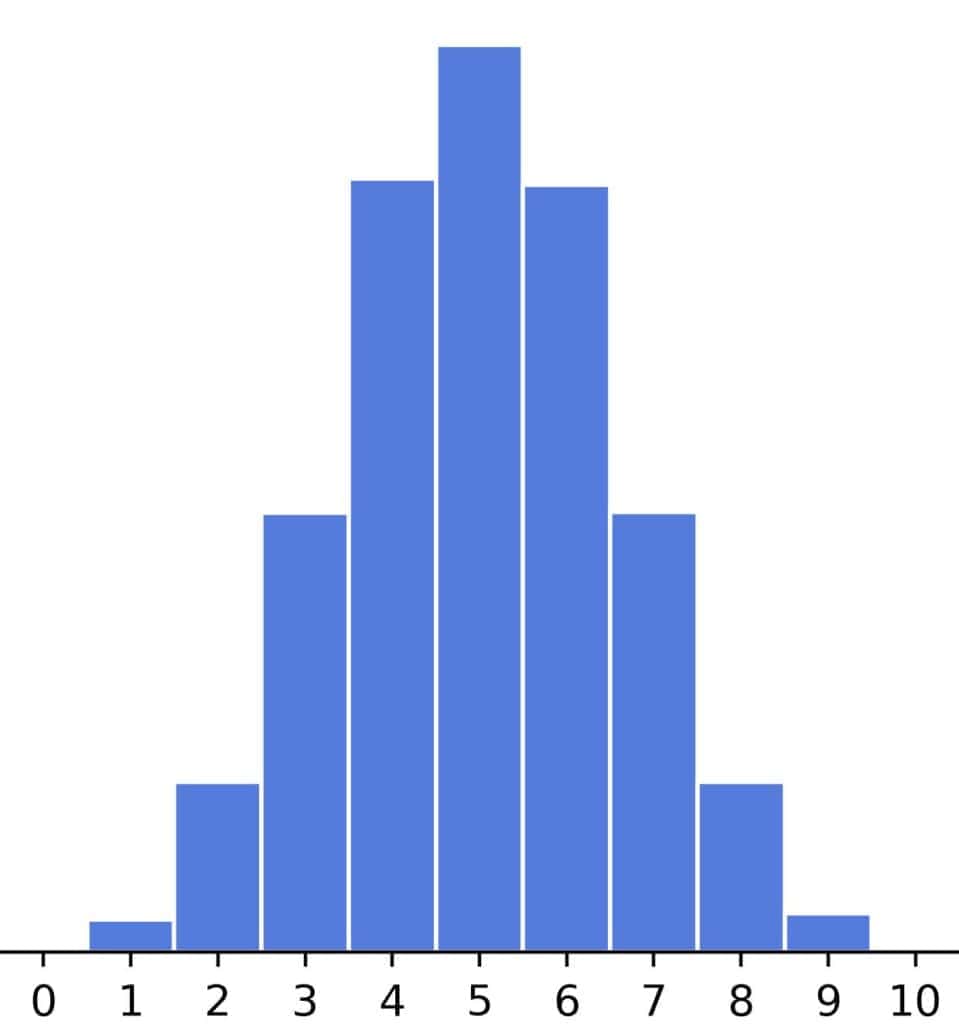

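Then, the number of tails $X$ in 10 flips follows the binomial distribution with $n = 10$ and $p = 1/2$, centered at $10 \cdot \frac{1}{2} = 5$:

$$P(X = k) = \binom{10}{k}\left(\frac{1}{2}\right)^{k}\left(\frac{1}{2}\right)^{10-k} = \binom{10}{k}\frac{1}{2^{10}}, \quad k = 0, 1, \ldots, 10$$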

From there, we can derive how much we can expect to win in this game and decide how much money to bet.
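As a quick sketch of that computation (Python, standard library only), we can tabulate the binomial probabilities for 10 fair tosses and derive the expected number of tails. The variable names are illustrative, and the payout rules of the bet are left out because the text doesn’t specify them:

```python
from math import comb

n, p = 10, 0.5  # 10 tosses of a fair coin; a tail counts as a win

# Binomial probabilities P(X = k) = C(n, k) * p^k * (1 - p)^(n - k)
pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]

expected_tails = sum(k * prob for k, prob in enumerate(pmf))
most_likely = max(range(n + 1), key=lambda k: pmf[k])
p_at_least_8 = sum(pmf[8:])  # chance of winning 8 or more times with a fair coin

print(f"E[X] = {expected_tails}")           # E[X] = 5.0
print(f"Most likely count: {most_likely}")  # Most likely count: 5
print(f"P(X >= 8) = {p_at_least_8:.4f}")    # P(X >= 8) = 0.0547
```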

However, what if we reverse the question? We flip a coin 10 times and win eight times. We may be delighted with the financial gain, but our friend isn’t and accuses us of using a biased coin.

To resolve the dispute, we must determine the coin’s inherent probability of landing tails in a random toss. This is precisely what Beta distributions help us do.

The Beta distribution with parameters $\alpha$ and $\beta$ shows how likely each $p \in [0, 1]$ is to be the success probability, given that there were $\alpha - 1$ successful and $\beta - 1$ unsuccessful trials.
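If we read $\alpha - 1$ and $\beta - 1$ as the numbers of successes and failures, the disputed game (8 tails, 2 heads) corresponds to a Beta(9, 3) distribution. The sketch below, which assumes this interpretation, computes how much of that distribution lies above 1/2. For integer parameters, it relies on the standard identity between the Beta CDF and a binomial tail, $F(x) = P(\text{Binomial}(\alpha+\beta-1, x) \ge \alpha)$; the helper name `beta_cdf_integer` is our own:

```python
from math import comb

def beta_cdf_integer(x, a, b):
    """CDF of Beta(a, b) at x for positive integer a and b.

    Uses the identity F(x) = P(Binomial(a + b - 1, x) >= a).
    """
    n = a + b - 1
    return sum(comb(n, j) * x**j * (1 - x) ** (n - j) for j in range(a, n + 1))

tails, heads = 8, 2                 # outcomes of the disputed game
a, b = tails + 1, heads + 1         # alpha - 1 successes, beta - 1 failures
p_above_half = 1 - beta_cdf_integer(0.5, a, b)
print(f"P(p > 1/2) = {p_above_half:.4f}")  # P(p > 1/2) = 0.9673
```

Under this model, about 97% of the distribution lies above 1/2. That supports the friend’s suspicion but doesn’t prove the coin is biased.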

3. Density

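Beta-distributed random variables are defined over $[0, 1]$ and, for parameters $\alpha > 0$ and $\beta > 0$, have the following density:

$$f(x) = C x^{\alpha-1}(1-x)^{\beta-1}, \quad x \in [0, 1]$$

The constant $C$ ensures that the cumulative distribution function is 1 for $x = 1$:

$$\int_{0}^{1} C x^{\alpha-1}(1-x)^{\beta-1}\,dx = 1 \implies C = \frac{1}{B(\alpha, \beta)}$$

where $B$ is the beta function:

$$B(\alpha, \beta) = \int_{0}^{1} x^{\alpha-1}(1-x)^{\beta-1}\,dx$$

Therefore, the density is:

$$f(x) = \frac{x^{\alpha-1}(1-x)^{\beta-1}}{B(\alpha, \beta)}$$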
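A direct translation of the density into Python might look like the sketch below. It computes $B(\alpha, \beta)$ through the identity $B(\alpha, \beta) = \frac{\Gamma(\alpha)\Gamma(\beta)}{\Gamma(\alpha+\beta)}$ with `math.gamma` and checks numerically (with a midpoint rule, an arbitrary choice) that the density integrates to 1. For very large parameters, `math.gamma` overflows, so a more robust version would work with `math.lgamma` instead:

```python
from math import gamma

def beta_function(a, b):
    """B(a, b) written via the Gamma function: Gamma(a) Gamma(b) / Gamma(a + b)."""
    return gamma(a) * gamma(b) / gamma(a + b)

def beta_pdf(x, a, b):
    """Beta(a, b) density at x in the open interval (0, 1), for a, b > 0."""
    if not 0.0 < x < 1.0:
        raise ValueError("x must lie in (0, 1)")
    return x ** (a - 1) * (1 - x) ** (b - 1) / beta_function(a, b)

def integrate_unit_interval(f, n=100_000):
    """Midpoint-rule approximation of the integral of f over [0, 1]."""
    h = 1.0 / n
    return h * sum(f((i + 0.5) * h) for i in range(n))

# The normalizing constant 1 / B(a, b) makes the density integrate to 1.
total = integrate_unit_interval(lambda x: beta_pdf(x, 2.0, 5.0))
print(f"{total:.6f}")  # 1.000000
```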

3.1. Why Is There a -1?

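Essentially, the -1 in the exponents comes from the -1 in the integrand of the Gamma function, $\Gamma(z) = \int_{0}^{\infty} t^{z-1}e^{-t}\,dt$, to which the beta function is tied via $B(\alpha, \beta) = \frac{\Gamma(\alpha)\Gamma(\beta)}{\Gamma(\alpha+\beta)}$.

We can find some intuition for it using measure theory.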

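The CDF of the Beta distribution with parameters $\alpha$ and $\beta$ is:

$$F(x) = \frac{1}{B(\alpha, \beta)}\int_{0}^{x} t^{\alpha-1}(1-t)^{\beta-1}\,dt$$

Let’s move the -1 terms to the denominator:

$$F(x) = \frac{1}{B(\alpha, \beta)}\int_{0}^{x} \frac{t^{\alpha}(1-t)^{\beta}}{t(1-t)}\,dt$$

Now, we have:

$$\frac{d}{dt}\ln\frac{t}{1-t} = \frac{1}{t} + \frac{1}{1-t} = \frac{1}{t(1-t)} \implies \frac{dt}{t(1-t)} = d\left(\ln\frac{t}{1-t}\right)$$

As a result, we can transform the CDF to:

$$F(x) = \frac{1}{B(\alpha, \beta)}\int_{0}^{x} t^{\alpha}(1-t)^{\beta}\,d\mu(t), \qquad \mu(t) = \ln\frac{t}{1-t}$$

where the density doesn’t have -1 in the exponents, and $\alpha$ and $\beta$ are the numbers of successful and unsuccessful trials.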

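Intuitively, if we measure sets of probabilities $t$ on the log-odds scale $\ln\frac{t}{1-t}$ instead of the linear one, we can use this interpretation of $\alpha$ and $\beta$. In more technical terms, the density $\frac{t^{\alpha}(1-t)^{\beta}}{B(\alpha, \beta)}$ is defined with respect to the measure $d\mu(t) = \frac{dt}{t(1-t)}$ rather than the usual Lebesgue measure $dt$.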
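To see this change of measure at work, we can integrate $t^{\alpha}(1-t)^{\beta}$ (no -1 in the exponents) on the log-odds scale and compare the result with $B(\alpha, \beta)$. This numerical sketch substitutes $u = \ln\frac{t}{1-t}$, so $d\mu = du$ and $t = \frac{1}{1+e^{-u}}$; the truncation bounds and step count are arbitrary choices:

```python
from math import exp, gamma

def beta_function(a, b):
    return gamma(a) * gamma(b) / gamma(a + b)

def sigmoid(u):
    """Inverse of the log-odds map t -> ln(t / (1 - t))."""
    return 1.0 / (1.0 + exp(-u))

def integral_over_log_odds(a, b, lo=-60.0, hi=60.0, n=100_000):
    """Approximate the integral of t^a (1 - t)^b d(mu) over (0, 1).

    With u = ln(t / (1 - t)), d(mu) becomes du and t = sigmoid(u), so we
    integrate sigmoid(u)^a * sigmoid(-u)^b over u in [lo, hi] (a truncation
    of the real line) with the midpoint rule.
    """
    h = (hi - lo) / n
    total = 0.0
    for i in range(n):
        u = lo + (i + 0.5) * h
        total += sigmoid(u) ** a * sigmoid(-u) ** b  # 1 - sigmoid(u) == sigmoid(-u)
    return total * h

a, b = 2.5, 4.0
print(integral_over_log_odds(a, b), beta_function(a, b))  # the two values nearly coincide
```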

3.2. Non-Integer Parameters

The parameters $\alpha$ and $\beta$ can be non-integers. However, the intuitive explanation was that $\alpha - 1$ and $\beta - 1$ denote the numbers of successful and unsuccessful trials (or $\alpha$ and $\beta$ if we use the measure $\mu$). How do we interpret a fractional $\alpha$ or $\beta$?

Sometimes, the boundary between success and failure isn’t clear-cut. For example, an experiment (a trial) can have several goals, and achieving some of them while failing at others constitutes partial success. To allow for this nuanced approach to evaluation, we use non-integer $\alpha$ and $\beta$.
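As a hypothetical illustration, suppose each trial gets a score in $[0, 1]$ equal to the fraction of its goals achieved, and a score $s$ counts as $s$ successes and $1 - s$ failures. This scoring rule is our assumption, not something the Beta family prescribes. Under the $\alpha - 1$, $\beta - 1$ interpretation, the resulting parameters are generally fractional:

```python
# Hypothetical scores: the fraction of goals each trial achieved.
scores = [1.0, 0.5, 0.75, 0.0, 1.0]

# Assumed rule: a score s adds s to the successes and 1 - s to the failures.
successes = sum(scores)
failures = sum(1 - s for s in scores)

alpha = successes + 1  # alpha - 1 = (fractional) number of successes
beta = failures + 1    # beta - 1 = (fractional) number of failures
print(alpha, beta)     # 4.25 2.75
```

Since $B(\alpha, \beta)$ is defined by an integral (equivalently, through the Gamma function), nothing in the density requires these parameters to be integers.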

4. Properties

Let’s now check some properties of this distribution family.

4.1. Mean

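The mean of a Beta distribution with parameters $\alpha$ and $\beta$ is:

$$E[X] = \int_{0}^{1} x\,\frac{x^{\alpha-1}(1-x)^{\beta-1}}{B(\alpha, \beta)}\,dx = \frac{1}{B(\alpha, \beta)}\int_{0}^{1} x^{\alpha}(1-x)^{\beta-1}\,dx = \frac{B(\alpha+1, \beta)}{B(\alpha, \beta)}$$

To simplify the expression, we’ll write $B$ using the Gamma function, $B(\alpha, \beta) = \frac{\Gamma(\alpha)\Gamma(\beta)}{\Gamma(\alpha+\beta)}$, and note that $\Gamma(z+1) = z\Gamma(z)$:

$$E[X] = \frac{\Gamma(\alpha+1)\Gamma(\beta)}{\Gamma(\alpha+\beta+1)}\cdot\frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)\Gamma(\beta)} = \frac{\alpha\Gamma(\alpha)}{(\alpha+\beta)\Gamma(\alpha+\beta)}\cdot\frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)} = \frac{\alpha}{\alpha+\beta}$$

If $\alpha = \beta$, the mean is 1/2. If $\alpha > \beta$, the distribution’s center is shifted to the right, and if $\alpha < \beta$, it’s shifted to the left.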

This has an intuitive explanation. If there are many successful outcomes, it makes sense to believe that the probability of success is higher and vice versa.
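We can sanity-check the closed form $\frac{\alpha}{\alpha+\beta}$ by integrating $x f(x)$ numerically. This is a sketch with a plain midpoint rule; the parameter pairs are arbitrary examples, and $(9, 3)$ matches the coin game with 8 tails and 2 heads under the $\alpha - 1$, $\beta - 1$ reading:

```python
from math import gamma

def beta_pdf(x, a, b):
    """Beta(a, b) density at x in (0, 1)."""
    return x ** (a - 1) * (1 - x) ** (b - 1) * gamma(a + b) / (gamma(a) * gamma(b))

def numerical_mean(a, b, n=100_000):
    """Midpoint-rule approximation of E[X], the integral of x f(x) over [0, 1]."""
    h = 1.0 / n
    return h * sum((i + 0.5) * h * beta_pdf((i + 0.5) * h, a, b) for i in range(n))

for a, b in [(2, 2), (9, 3), (2, 5)]:
    print(f"Beta({a}, {b}): closed form {a / (a + b):.6f}, numerical {numerical_mean(a, b):.6f}")
```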

4.2. Variance

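We can similarly compute the variance. Since $E[X^2] = \frac{B(\alpha+2, \beta)}{B(\alpha, \beta)} = \frac{\alpha(\alpha+1)}{(\alpha+\beta)(\alpha+\beta+1)}$, we get:

$$\mathrm{Var}[X] = E[X^2] - E[X]^2 = \frac{\alpha\beta}{(\alpha+\beta)^2(\alpha+\beta+1)} = \frac{E[X]\left(1 - E[X]\right)}{\alpha+\beta+1}$$

So, for a fixed mean, the larger $\alpha + \beta$, the smaller the variance. That is also intuitive. The more experiments we conduct, the more we know about the success probability, so the distribution we use as its model should be less variable.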
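The following sketch evaluates the variance formula while keeping the ratio $\alpha : \beta = 4 : 1$ (so the mean stays at 0.8) and scaling up the total count. The specific counts are arbitrary examples:

```python
def beta_mean(a, b):
    return a / (a + b)

def beta_variance(a, b):
    """Var[X] = a b / ((a + b)^2 (a + b + 1)) for X ~ Beta(a, b)."""
    return a * b / ((a + b) ** 2 * (a + b + 1))

# Same 4:1 ratio of successes to failures, more and more trials.
for k in [1, 2, 10, 20]:
    a, b = 4 * k, 1 * k
    print(a, b, beta_mean(a, b), round(beta_variance(a, b), 5))
```

The printed variances shrink from about 0.0267 to about 0.0016, while the mean stays at 0.8.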

4.3. Skewness

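The skewness of a distribution quantifies its deviation from symmetry. In the case of a Beta distribution with shape parameters $\alpha$ and $\beta$, the skewness is:

$$\gamma_1 = \frac{2(\beta-\alpha)\sqrt{\alpha+\beta+1}}{(\alpha+\beta+2)\sqrt{\alpha\beta}}$$

So, for $\alpha = \beta$, the distribution is symmetric; it’s right-skewed for $\alpha < \beta$ and left-skewed for $\alpha > \beta$.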

This also has an intuitive explanation. If the number of successes equals the number of unsuccessful trials, there are no grounds to believe the true success probability is more likely to be > 1/2 than < 1/2, and vice versa. A symmetric distribution fits this assertion.

By the same logic, if $\alpha > \beta$, successful trials are a majority, so it’s reasonable to believe that the true success probability is > 1/2. The right model for this assertion is a distribution centered around a value > 1/2. However, the longer tail stretching toward 0 makes the distribution left-skewed. The converse holds for $\alpha < \beta$.
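The closed-form skewness can be coded directly and compared against a numerical third standardized moment. The sketch below uses an arbitrary midpoint grid; $(2, 5)$ and $(9, 3)$ are just example pairs on either side of $\alpha = \beta$:

```python
from math import gamma, sqrt

def beta_skewness(a, b):
    """Closed-form skewness of Beta(a, b)."""
    return 2 * (b - a) * sqrt(a + b + 1) / ((a + b + 2) * sqrt(a * b))

def numerical_skewness(a, b, n=100_000):
    """Third standardized moment via midpoint-rule integration over [0, 1]."""
    norm = gamma(a + b) / (gamma(a) * gamma(b))
    h = 1.0 / n
    xs = [(i + 0.5) * h for i in range(n)]
    ws = [h * norm * x ** (a - 1) * (1 - x) ** (b - 1) for x in xs]  # f(x) dx
    mean = sum(w * x for w, x in zip(ws, xs))
    var = sum(w * (x - mean) ** 2 for w, x in zip(ws, xs))
    third = sum(w * (x - mean) ** 3 for w, x in zip(ws, xs))
    return third / var ** 1.5

print(beta_skewness(3, 3))                            # 0.0 (symmetric)
print(beta_skewness(2, 5), numerical_skewness(2, 5))  # positive: right-skewed
print(beta_skewness(9, 3))                            # negative: left-skewed
```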

4.4. Kurtosis

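The formula for the excess kurtosis is a bit more complex:

$$\gamma_2 = \frac{6\left[(\alpha-\beta)^2(\alpha+\beta+1) - \alpha\beta(\alpha+\beta+2)\right]}{\alpha\beta(\alpha+\beta+2)(\alpha+\beta+3)}$$

Negative values indicate tails lighter than those of the normal distribution, and positive values indicate heavier tails. The exact effect on the shape depends on the values of other moments (which are, in turn, determined by $\alpha$ and $\beta$).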
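The formula translates directly into code. The parameter pairs below are arbitrary examples: for $\alpha = \beta = 1$, it reproduces the excess kurtosis of the uniform distribution, -6/5, and for large equal parameters, it gets close to 0, the value for the normal distribution:

```python
def beta_excess_kurtosis(a, b):
    """Closed-form excess kurtosis of Beta(a, b)."""
    num = 6 * ((a - b) ** 2 * (a + b + 1) - a * b * (a + b + 2))
    den = a * b * (a + b + 2) * (a + b + 3)
    return num / den

print(beta_excess_kurtosis(1, 1))              # -1.2 (uniform distribution)
print(beta_excess_kurtosis(0.5, 0.5))          # -1.5 (U-shaped, light tails)
print(round(beta_excess_kurtosis(50, 50), 4))  # -0.0583 (close to normal)
```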

4.5. Mode

The mode of a distribution is its most likely value, i.e., the value with the highest density.

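So, to compute it, we need to find the $x$ that maximizes the density $f(x)$. Setting the first derivative of $f$ to zero:

$$f'(x) = \frac{x^{\alpha-2}(1-x)^{\beta-2}}{B(\alpha, \beta)}\left[(\alpha-1)(1-x) - (\beta-1)x\right] = 0$$

and solving for $x$, we get that the mode is:

$$\text{mode} = \frac{\alpha-1}{\alpha+\beta-2}, \quad \alpha, \beta > 1$$

For a symmetric distribution, $\alpha = \beta$, and the mode is equal to the mean:

$$\frac{\alpha-1}{2\alpha-2} = \frac{1}{2} = \frac{\alpha}{2\alpha}$$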
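A simple way to confirm the mode formula is to compare it with a brute-force grid search over $(0, 1)$. In this sketch, the grid resolution is arbitrary, and the normalizing constant is dropped because it doesn’t change where the maximum is:

```python
def beta_mode(a, b):
    """Mode of Beta(a, b); the closed form assumes a > 1 and b > 1."""
    if a <= 1 or b <= 1:
        raise ValueError("the closed-form mode requires a > 1 and b > 1")
    return (a - 1) / (a + b - 2)

def unnormalized_density(x, a, b):
    # The constant 1 / B(a, b) doesn't change the location of the maximum.
    return x ** (a - 1) * (1 - x) ** (b - 1)

a, b = 9, 3  # 8 tails and 2 heads, read as alpha - 1 and beta - 1
grid = [i / 10_000 for i in range(1, 10_000)]
grid_argmax = max(grid, key=lambda x: unnormalized_density(x, a, b))
print(beta_mode(a, b), grid_argmax)  # 0.8 0.8
```

Here, the mode coincides with the observed share of tails, 8/10.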

5. Shapes

Depending on the values of $\alpha$ and $\beta$, the Beta density can take many shapes.

5.1. Symmetric Shapes

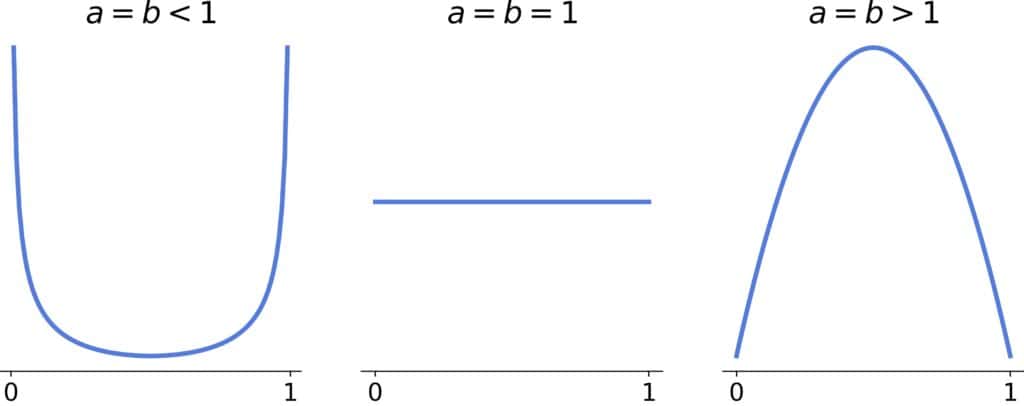

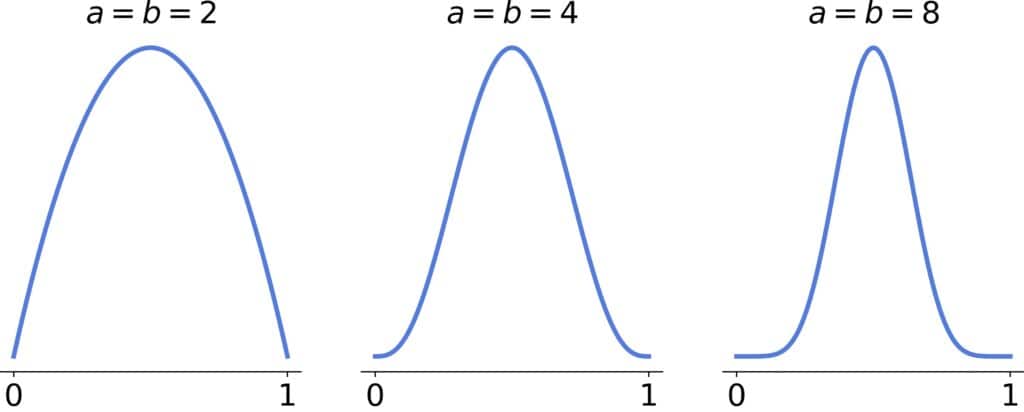

Symmetric shapes have $\alpha = \beta$, and we differentiate between three cases:

The special case $\alpha = \beta = 1$ corresponds to the uniform distribution.

If $\alpha = \beta < 1$, the distribution is U-shaped, and if $\alpha = \beta > 1$, it’s bell-shaped and approaches the normal distribution as $\alpha$ and $\beta$ increase.

There will be two inflection points if $\alpha = \beta > 2$.

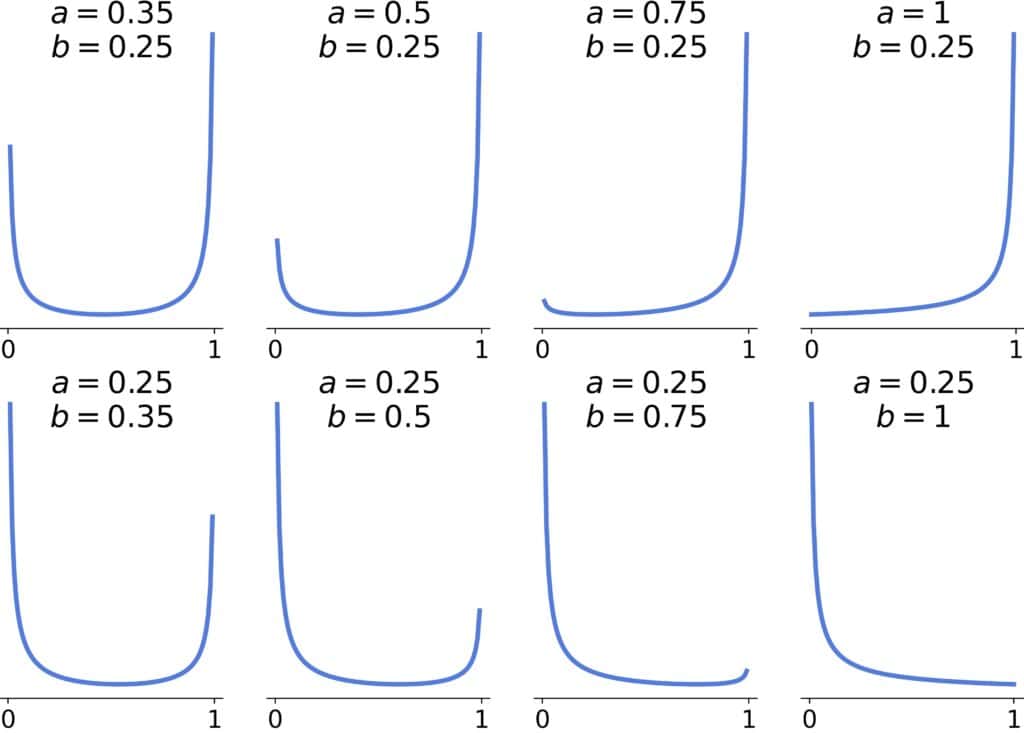
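A rough numerical way to check the inflection-point claim is to count the sign changes of a finite-difference second derivative over a fine grid. The sketch below does that for a few symmetric parameter values; the helper names, grid size, and step are our own choices, and the method could miss inflection points that fall between grid points or very close to the endpoints:

```python
def shape(x, a, b):
    """Unnormalized Beta(a, b) density; a positive factor can't move inflection points."""
    return x ** (a - 1) * (1 - x) ** (b - 1)

def count_inflection_points(a, b, n=10_000):
    """Count sign changes of a finite-difference second derivative on (0, 1)."""
    h = 1.0 / n
    d = h / 2
    signs = []
    for i in range(1, n):
        x = i * h
        second = shape(x - d, a, b) - 2 * shape(x, a, b) + shape(x + d, a, b)
        if second != 0:
            signs.append(second > 0)
    return sum(1 for s, t in zip(signs, signs[1:]) if s != t)

for a in [0.5, 1.5, 3, 5]:
    print(f"alpha = beta = {a}: {count_inflection_points(a, a)} inflection point(s)")
```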

5.2. Asymmetric Shapes

For asymmetric shapes, $\alpha < \beta$ corresponds to right-skewed, and $\alpha > \beta$ to left-skewed distributions.

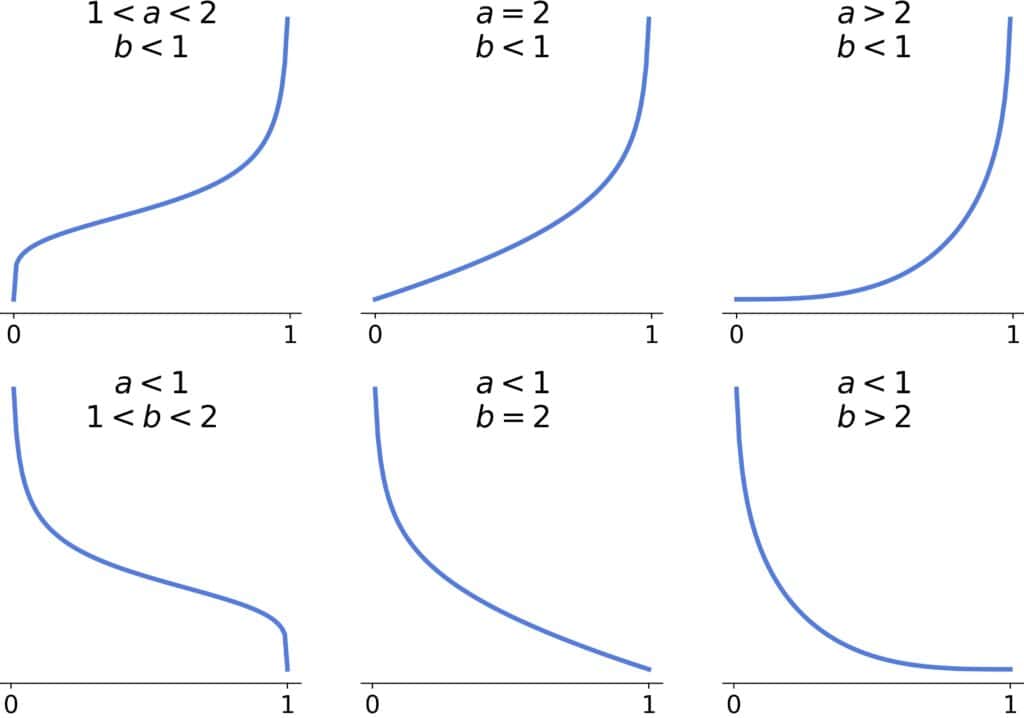

If both $\alpha < 1$ and $\beta < 1$, the distribution will be convex, approaching an L-shape (reversed or not) as the larger parameter approaches 1:

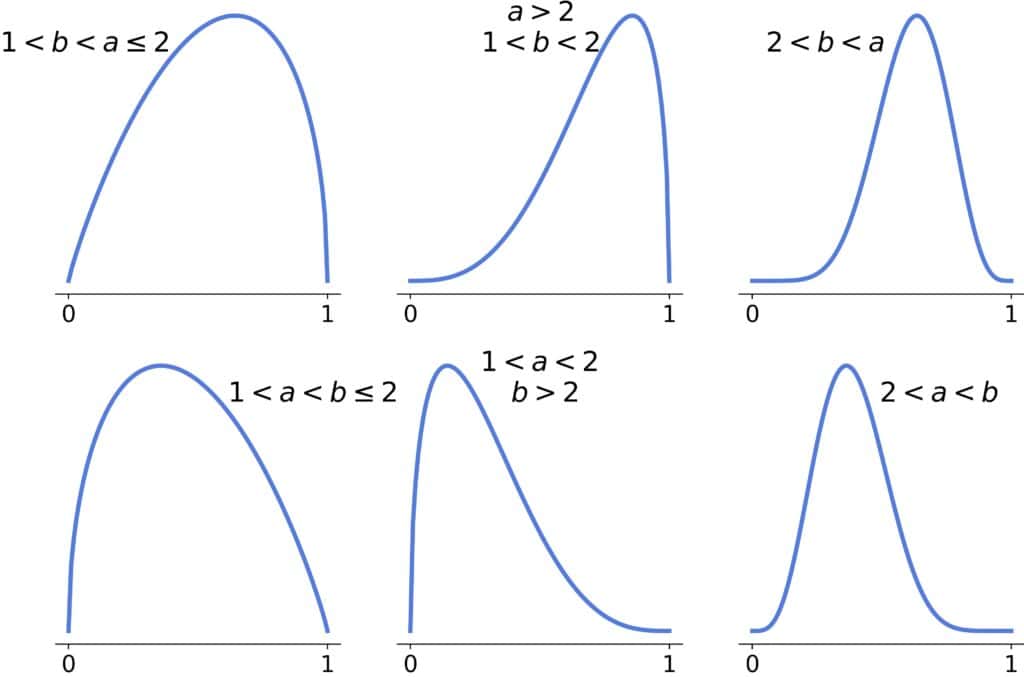

If $\alpha > 1$ and $\beta > 1$, the distribution will be unimodal, and, for a fixed sum $\alpha + \beta$, its tails get heavier (its excess kurtosis grows) as the difference between the parameters grows. There will be one inflection point if exactly one parameter is > 2 and two inflection points if both are > 2.

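If $\alpha < 1$ and $\beta \ge 1$, or if $\alpha \ge 1$ and $\beta < 1$, the shape will be convex or have one inflection point.

The inflection point will be there if the larger parameter lies strictly between 1 and 2.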
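The inflection-point rules of this section can be collected into one small function. It’s only a restatement of the rules in code (`predicted_inflection_points` is a hypothetical helper), not a derivation of them, so it’s worth cross-checking against a numerical count of sign changes of the second derivative:

```python
def predicted_inflection_points(a, b):
    """Number of inflection points of the Beta(a, b) density on (0, 1),
    following the shape rules stated in this article (a, b > 0)."""
    lo, hi = min(a, b), max(a, b)
    if lo > 1:
        # Unimodal case: one inflection point per parameter greater than 2.
        return int(a > 2) + int(b > 2)
    if lo < 1 <= hi:
        # One parameter below 1, the other at least 1.
        return 1 if 1 < hi < 2 else 0
    # Both parameters below 1 (convex), or one equal to 1 (no inflection).
    return 0

for a, b in [(1.5, 3), (3, 6), (0.5, 1.5), (0.5, 3), (0.5, 0.8)]:
    print((a, b), predicted_inflection_points(a, b))
```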

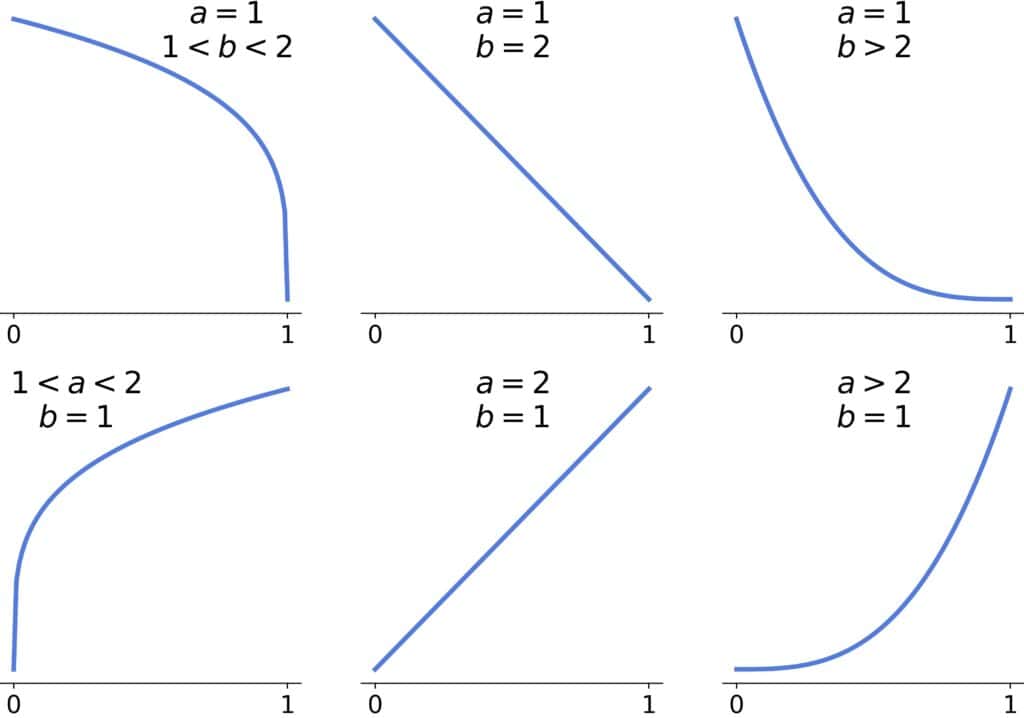

The last remaining cases are $\alpha = 1, \beta > 1$ and $\alpha > 1, \beta = 1$. We have a straight line if the larger parameter equals two, a concave curve if it’s < 2, and a convex one if it’s > 2.
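To check these three curvature cases numerically, we can look at the sign of a finite-difference second derivative of the density for $\alpha = 1$ (the case $\beta = 1$ is its mirror image). The grid size and tolerance in this sketch are arbitrary choices:

```python
def shape(x, a, b):
    """Unnormalized Beta(a, b) density (constant factors don't affect curvature)."""
    return x ** (a - 1) * (1 - x) ** (b - 1)

def curvature(a, b, n=100, tol=1e-12):
    """Classify the curve on (0, 1) from finite-difference second derivatives."""
    d = 0.5 / n
    second = [
        shape(i / n - d, a, b) - 2 * shape(i / n, a, b) + shape(i / n + d, a, b)
        for i in range(1, n)
    ]
    if all(abs(s) <= tol for s in second):
        return "straight line"
    if all(s < 0 for s in second):
        return "concave"
    if all(s > 0 for s in second):
        return "convex"
    return "mixed"

for b in [1.5, 2, 3]:
    print(f"alpha = 1, beta = {b}: {curvature(1, b)}")
```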

6. Conclusion

In this article, we discussed the family of Beta distributions in statistics. These distributions are defined over [0, 1] and can take many shapes, making them suitable for modeling normalized quantities (such as probabilities).