1. Introduction

As workloads grow, traditional computers built on the von Neumann architecture run into limits in speed and energy use. Neuromorphic computing addresses some of these limitations by borrowing from the architecture of the human brain.

In this tutorial, we’ll discuss the fundamentals of neuromorphic computing, explaining how it works and its importance. We’ll also provide a practical use case to illustrate its application.

2. Neurons and Synapses: How the Brain Transmits Signals

The human brain comprises billions of neurons, which communicate by transmitting electrical signals known as spikes. Each neuron is connected to others through synapses.

A neuron accumulates the spikes that arrive through its synapses. When the accumulated input exceeds a specific threshold, the neuron fires a spike of its own, and its synapses transmit that spike to the receiving neurons.

The brain computes information through patterns of these spikes that occur in parallel. In this way, the brain can perform multiple tasks simultaneously.

3. Neuromorphic Computing vs. Von Neumann Architecture

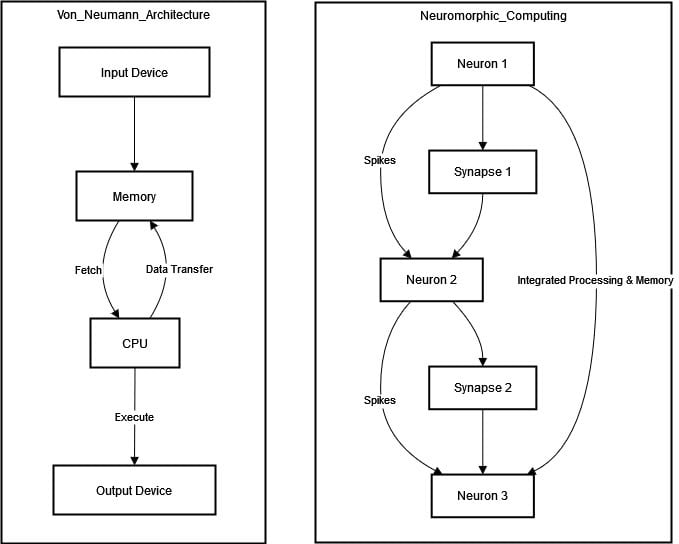

Neuromorphic computing designs hardware and software systems that operate similarly to the neurobiological structures of the human brain. These systems differ from classic Von Neumann architectures:

In neuromorphic computing, neurons (corresponding to the processing units) and synapses (corresponding to the memory units) are integrated into the same units. Each neuron can store and process information simultaneously.

On the other hand, the Von Neumann architecture separates memory and processing. Instructions and data are fetched from a memory unit and processed in the CPU, and the results are written back to memory or sent to an output device.

A key limitation of the Von Neumann architecture is the Von Neumann bottleneck. Because instructions and data share the same memory and the same path to it, the CPU can fetch only one instruction or one piece of data at a time. The CPU therefore often has to wait while data is transferred back and forth between memory and the processor, which limits how fast the entire system can work.

4. Core Concepts in Neuromorphic Computing

Let’s consider the main differences between neuromorphic computing and traditional computing systems:

- Event-driven processing

- Spiking neural networks

- Energy efficiency

- Parallelism and adaptability

4.1. Event-Driven Processing

One of the differences between a neuromorphic system and a traditional computer lies in how processing tasks are executed. Traditional processors are driven by a clock: operations advance one after another in time intervals of constant duration, whether or not the input has changed.

In contrast, neuromorphic systems use event-driven processing, which means they perform computations only when an event occurs.

This asynchronous mode of operation is closer to how the human brain functions, which makes it better suited to sparse, real-time data such as sensor streams.
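To make the contrast concrete, here's a minimal software sketch. It's only an analogy for what neuromorphic hardware does in circuitry: the function names, the `min_change` parameter, and the sample signal are all assumptions made for illustration. The clock-driven loop calls the handler on every tick, while the event-driven loop calls it only when the input changes noticeably:

```python
def clock_driven(samples, handler):
    """Invoke the handler on every tick, whether or not the input changed."""
    calls = 0
    for value in samples:
        handler(value)
        calls += 1
    return calls


def event_driven(samples, handler, min_change=0.5):
    """Invoke the handler only when the input moves by at least min_change."""
    calls = 0
    last = None  # last value that triggered the handler
    for value in samples:
        if last is None or abs(value - last) >= min_change:
            handler(value)
            calls += 1
            last = value
    return calls


# Hypothetical sensor readings: mostly quiet, with one burst of activity.
signal = [0.0, 0.0, 0.1, 0.0, 2.0, 2.1, 2.0, 0.0, 0.0, 0.1]

seen = []
print(clock_driven(signal, lambda v: None))  # 10
print(event_driven(signal, seen.append))     # 3
print(seen)                                  # [0.0, 2.0, 0.0]
```

For this mostly quiet signal, the event-driven version does its work three times instead of ten, and the saving grows as the input gets sparser.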

4.2. Spiking Neural Networks

In spiking neural networks, neurons communicate via discrete electrical spikes instead of continuous signals.

For comparison, let’s consider the ReLU (Rectified Linear Unit) activation function, which is widely used in conventional artificial neural networks:

If the input is positive, ReLU produces an output equal to the input, allowing the neuron to activate and pass signals forward.

However, if the input is zero or negative, the output is 0, leaving the neuron inactive. In such cases, the neuron doesn’t contribute to the network’s overall output, effectively blocking any further propagation of signals.

Spiking neurons function on a different principle. Instead of generating output instantaneously from the current input, they accumulate inputs over time. When the membrane potential hits a specific threshold, the neuron “fires” a spike, sending a signal. This mechanism doesn’t just consider the input but also the timing and history of prior inputs.
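The difference between the two can be sketched in a few lines. The spiking neuron below is a leaky integrate-and-fire model, one common simplification; the leak factor, the threshold value, and the reset-to-zero behavior are assumptions chosen for illustration and aren't taken from any particular chip:

```python
def relu(x):
    """Stateless activation: the output depends only on the current input."""
    return max(0.0, x)


class LeakyIntegrateAndFire:
    """Minimal leaky integrate-and-fire neuron with illustrative parameters."""

    def __init__(self, threshold=1.0, leak=0.9):
        self.threshold = threshold  # potential at which the neuron fires
        self.leak = leak            # fraction of the potential kept per step
        self.potential = 0.0        # membrane potential (the neuron's state)

    def step(self, current):
        """Advance one time step; return 1 if the neuron spikes, else 0."""
        self.potential = self.potential * self.leak + current
        if self.potential >= self.threshold:
            self.potential = 0.0    # reset after firing
            return 1
        return 0


inputs = [0.4, 0.4, 0.4, 0.0, 0.0, 0.4, 0.4, 0.4]

print([relu(x) for x in inputs])
# [0.4, 0.4, 0.4, 0.0, 0.0, 0.4, 0.4, 0.4]

neuron = LeakyIntegrateAndFire()
spikes = [neuron.step(x) for x in inputs]
print(spikes)
# [0, 0, 1, 0, 0, 0, 0, 1]
```

ReLU maps each input to an output independently. The spiking neuron stays silent until enough input has accumulated, so the same input value of 0.4 produces a spike only on the third consecutive occurrence.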

4.3. Energy Efficiency

One of the most important advantages of neuromorphic computing is its energy efficiency.

Most modern AI systems are power-intensive. They usually run on tens of thousands of servers in massive data centers. In comparison, the human brain uses around 20 watts. Despite this low power budget, it handles challenging tasks such as visual recognition, decision-making, and motor control.

Neuromorphic computing aims to replicate this efficiency.

Because neuromorphic systems compute only when events occur, they can handle complex tasks using less power than traditional clocked hardware, which consumes energy continuously. This is important wherever power consumption is a constraint, including edge computing, wearable devices, and autonomous systems.
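As a rough illustration of where the savings come from, we can count the arithmetic operations in a small layer. This sketch assumes that the operation count is a reasonable proxy for energy, which is a simplification; the function names, weights, and spike pattern are made up for the example:

```python
def dense_layer(inputs, weights):
    """Multiply-accumulate every input with every weight, active or not."""
    n_out = len(weights[0])
    totals = [0.0] * n_out
    ops = 0
    for i, x in enumerate(inputs):
        for j in range(n_out):
            totals[j] += x * weights[i][j]
            ops += 1
    return totals, ops


def event_layer(spikes, weights):
    """Accumulate weights only for the inputs that actually spiked."""
    n_out = len(weights[0])
    totals = [0.0] * n_out
    ops = 0
    for i, fired in enumerate(spikes):
        if not fired:
            continue  # silent inputs cost nothing
        for j in range(n_out):
            totals[j] += weights[i][j]
            ops += 1
    return totals, ops


# Four inputs, two outputs; only one input is active in this time step.
weights = [[0.5, 0.25], [0.5, 0.25], [0.5, 0.25], [0.5, 0.25]]
spikes = [0, 1, 0, 0]

print(dense_layer(spikes, weights))  # ([0.5, 0.25], 8)
print(event_layer(spikes, weights))  # ([0.5, 0.25], 2)
```

Both versions produce the same totals, but the event-driven one performs two operations instead of eight because it skips the silent inputs.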

4.4. Parallelism and Adaptability

Neuromorphic computing also borrows the brain’s ability to handle multiple tasks in parallel. Because every neuron processes its own inputs independently, neuromorphic systems inherently support massive parallelism, which gives them greater potential for complex, data-intensive operations.

Furthermore, neuromorphic systems are adaptable. Biological brains can change the connections between neurons. Such changes occur through a process called synaptic plasticity. Neuromorphic chips replicate this, using synapses whose strengths can be adjusted in response to learning experiences.
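One widely studied plasticity rule is spike-timing-dependent plasticity (STDP), in which a synapse is strengthened when the sending neuron fires shortly before the receiving one and weakened in the opposite case. The sketch below is a simplified pair-based version; the function name, the constants, and the clipping of weights to the range 0 to 1 are assumptions for illustration, and the learning rules implemented on real chips differ in detail:

```python
import math


def stdp_update(weight, t_pre, t_post, a_plus=0.05, a_minus=0.05, tau=20.0):
    """Return the new weight after one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:
        # Sender fired before receiver: strengthen the synapse.
        weight += a_plus * math.exp(-dt / tau)
    elif dt < 0:
        # Receiver fired before sender: weaken the synapse.
        weight -= a_minus * math.exp(dt / tau)
    return min(1.0, max(0.0, weight))  # keep the weight within [0, 1]


w = 0.5
print(stdp_update(w, t_pre=10.0, t_post=15.0) > w)  # True
print(stdp_update(w, t_pre=15.0, t_post=10.0) < w)  # True
```

The exponential term makes the change larger when the two spikes are close together in time, so tightly correlated activity shapes the connection more strongly than loosely correlated activity.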

4.5. Summary of Core Concepts

The table below illustrates the key differences between neuromorphic and traditional computing architectures.

| Core Concept | Neuromorphic Computing | Traditional Computing |
|---|---|---|
| Spiking Neural Networks | Uses discrete electrical pulses or “spikes” to transmit information | Artificial neural networks use continuous activation functions |
| Event-Driven Processing | Computations are performed only in response to significant events (e.g., spikes) | Relies on clock cycles |
| Energy Efficiency | Consumes less power | Power-intensive |
| Parallelism and Adaptability | Supports massive parallelism and adapts by adjusting synaptic strengths in response to learning | Operates sequentially or with limited parallelism; adaptation is less dynamic |

This comparison shows why neuromorphic architectures are attractive for energy-efficient, adaptive tasks that require real-time responsiveness.

5. Some Hardware Implementations

Several companies and research institutions are developing dedicated neuromorphic hardware.

5.1. IBM TrueNorth

One of the earliest and best-known neuromorphic processors is IBM’s TrueNorth chip. It has over one million artificial neurons and hundreds of millions of synapses.

The chip is designed to execute tasks such as pattern recognition and sensor processing with a tiny fraction of the energy regular processors require.

TrueNorth operates in an event-driven mode and uses spike-based communication.

5.2. Intel Loihi

Another popular example of neuromorphic hardware is Intel’s Loihi chip. It supports on-chip learning, so it can adjust its synaptic weights in real time.

Loihi is well-suited for applications that require continuous adaptation, such as real-time optimization, robotic control, and sensor fusion.

5.3. BrainScaleS

BrainScaleS is a neuromorphic platform developed at Heidelberg University as part of the European Human Brain Project.

BrainScaleS is designed to emulate the behavior of spiking neurons with analog circuits. This allows it to run up to 10,000 times faster than biological neurons.

Spiking neural networks communicate through discrete spikes. Internally, however, analog neuromorphic systems such as BrainScaleS accumulate inputs as continuous quantities. This approach mimics the continuous processes observed in biological neurons to determine when a spike should occur.
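One common textbook way to describe this continuous accumulation is the leaky integrate-and-fire model. It's shown here only as an illustration and isn't necessarily the neuron model a given chip implements. The symbols are assumptions introduced for this sketch: $V(t)$ is the membrane potential, $\tau_m$ the membrane time constant, $V_{\text{rest}}$ the resting potential, $R$ the membrane resistance, $I(t)$ the input current, $V_{\text{th}}$ the firing threshold, and $V_{\text{reset}}$ the potential after a spike:

```latex
\tau_m \frac{dV(t)}{dt} = -\bigl(V(t) - V_{\text{rest}}\bigr) + R\,I(t),
\qquad
V(t) \ge V_{\text{th}} \;\Rightarrow\; \text{spike, then } V(t) \leftarrow V_{\text{reset}}
```

The differential equation is the continuous part: the potential rises with the input and decays toward its resting value. The threshold condition is the discrete part, which turns that continuous state into a spike.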

6. Practical Use Case: Real-Time Seizure Detection

Let’s consider an example in which we monitor patients with epilepsy in real time to identify seizures and act before injury or complications can occur.

Traditional monitoring systems rely on ordinary processors, which struggle with the large volumes of data that neural signals produce. Additionally, they might require significant energy and respond with a delay.

6.1. Neuromorphic Computing Solution

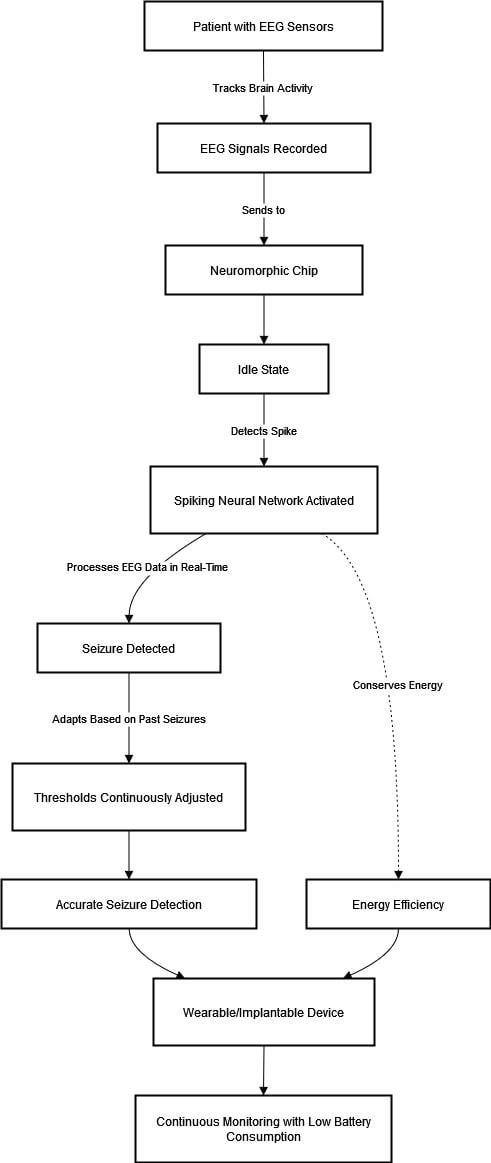

In a neuromorphic system, electroencephalography (EEG) sensors continuously track the patient’s brain activity and record neural signals. These recorded signals are then relayed to neuromorphic chips like IBM’s TrueNorth or Intel’s Loihi for processing.

Upon receiving a signal, the system doesn’t process every bit of data because this would be energy-consuming and inefficient. Rather, it stays idle until it detects a significant spike that could indicate seizure activity.

When such a spike occurs, the embedded spiking neural network in the chip activates. It processes relevant EEG data in real time to respond to critical events while conserving energy. The system can learn from past seizure patterns, continuously adjusting its detection thresholds.

This adaptability ensures that the chip becomes increasingly accurate at identifying seizure onsets. Additionally, it can personalize its responses based on the patient’s evolving neural activity.

As the system executes these tasks, it mimics the brain’s energy-efficient strategies by only using power when needed. As a result, monitoring devices can run continuously with minimal battery consumption, and wearable or implantable devices can monitor patients without frequent battery changes.

In short, the neuromorphic chip processes the incoming EEG signals only when it detects a spike. This approach enables real-time seizure detection together with energy-efficient, continuous patient monitoring.
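The idle-until-spike behavior and the adapting threshold can be sketched in plain Python. This is a toy model, not a clinical algorithm and not the programming interface of TrueNorth or Loihi: the class name, its parameters, and the amplitude values are all hypothetical:

```python
class AdaptiveSpikeDetector:
    """Flags samples that exceed a threshold tracking the recent baseline."""

    def __init__(self, factor=3.0, rate=0.1, initial_baseline=1.0):
        self.factor = factor              # multiple of baseline that counts as an event
        self.rate = rate                  # how quickly the baseline adapts
        self.baseline = initial_baseline  # running estimate of normal amplitude

    def process(self, amplitude):
        """Return True if this sample should wake the downstream network."""
        magnitude = abs(amplitude)
        if magnitude > self.factor * self.baseline:
            return True  # event: hand the data off for further processing
        # No event: update the baseline cheaply and stay idle.
        self.baseline += self.rate * (magnitude - self.baseline)
        return False


# Made-up EEG amplitudes: normal activity with a short high-amplitude burst.
eeg = [0.9, 1.1, 1.0, 0.8, 6.5, 7.2, 1.0, 0.9]

detector = AdaptiveSpikeDetector()
events = [i for i, sample in enumerate(eeg) if detector.process(sample)]
print(events)  # [4, 5]
```

Only the two burst samples trigger further processing. Because the baseline follows the patient’s normal activity, the effective threshold shifts over time, which loosely mirrors the personalization described above.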

7. Conclusion

In this article, we discussed how neuromorphic computing emulates the human brain.

Traditional computing systems split up computational tasks into separate memory and processing functions. In contrast, neuromorphic computing combines memory and processing in the same unit.

Neuromorphic computing has the potential for far-reaching applications in healthcare, robotics, and edge computing.