1. Introduction

Language models are a core component of natural language processing (NLP). They enable machines to understand, generate, and manipulate human language. Whether based on statistical approaches or neural networks, these models are designed to predict the likelihood of word sequences or generate coherent text.

In this tutorial, we’ll explore the basics, types, and applications of language models and explain their significance in NLP.

2. What’s a Language Model?

In one sentence, a language model is a tool that assigns probabilities to word sequences. We use language models in various NLP tasks, including text generation, machine translation, speech recognition, and sentiment analysis.
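To make this definition concrete, the probability of a word sequence is commonly factorized with the chain rule of probability. The notation below ($w_i$ for the $i$-th word and $m$ for the sequence length) is introduced here for illustration:

$$P(w_1, \dots, w_m) = \prod_{i=1}^{m} P(w_i \mid w_1, \dots, w_{i-1})$$

Each factor is the probability of the next word given all the words before it, which is why predicting the next word and scoring a whole sequence are two views of the same task.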

For example, ChatGPT is an AI tool based on a large language model (LLM). The underlying model is trained on large amounts of text data to learn language patterns and context. We can use ChatGPT to answer questions, write content, explain ideas, have conversations, and more.

Language models are built by analyzing large textual datasets to understand grammar, syntax, semantics, and contextual relationships between words. Their main objectives are understanding the context and predicting future words or phrases.

2.1. Types of Language Models

We can categorize language models into various types based on their structure and approach. Overall, there are two separate groups of language models:

- Statistical language models (SLMs)

- Neural language models (NLMs)

3. Statistical Language Models

Statistical language models estimate the probability of word sequences using traditional probabilistic methods. Common approaches include:

- N-grams

- Exponential models

- Skip-gram models

3.1. N-grams

An n-gram is a sequence of adjacent words or letters from a particular text source.

N-gram models compute the probability of a word based on the preceding $N-1$ words. Unigrams ($N = 1$), bigrams ($N = 2$), trigrams ($N = 3$), and higher-order N-grams are all based on this concept.

For example, a trigram model predicts a word based on the two preceding words, while a unigram treats each word as independent of its context. N-grams remain a fundamental statistical tool for many language modeling tasks.
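As a minimal sketch of this idea, we can estimate a bigram model by counting word pairs in a corpus. The function name `train_bigram_model`, the toy corpus, and the `<s>` and `</s>` boundary markers are assumptions made for illustration, and the estimate uses plain relative frequencies without smoothing:

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    """Estimate P(word | previous word) by relative frequency."""
    context_counts = Counter()
    bigram_counts = defaultdict(Counter)
    for tokens in sentences:
        # Pad with hypothetical start and end markers
        padded = ["<s>"] + tokens + ["</s>"]
        for prev, word in zip(padded, padded[1:]):
            context_counts[prev] += 1
            bigram_counts[prev][word] += 1
    # Divide each pair count by the count of its context word
    return {
        prev: {word: count / context_counts[prev] for word, count in following.items()}
        for prev, following in bigram_counts.items()
    }

# Toy corpus, already tokenized
corpus = [
    ["the", "cat", "sat"],
    ["the", "cat", "ran"],
    ["the", "dog", "sat"],
]
model = train_bigram_model(corpus)
print(model["the"])  # "cat" gets probability 2/3, "dog" gets 1/3
print(model["cat"])  # "sat" and "ran" each get 0.5
```

In practice, unseen word pairs would get zero probability under this estimate, so real n-gram models usually add some form of smoothing.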

3.2. Exponential Models

Exponential models, such as maximum-entropy models, enhance traditional N-gram approaches by incorporating features beyond simple frequency counts.

In NLP, maximum-entropy models use features such as part-of-speech (POS) tags, specific words, and patterns to predict the next word. Besides maximum entropy models, exponential models include logistic regression and conditional random fields, which also use exponential functions to model relationships.

By weighting these features, exponential models refine predictions and account for complex linguistic structures. The weights in maximum-entropy models are learned by maximizing the likelihood of the training data, so that the model's predictions match the observed data as closely as possible.
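A common way to write a maximum-entropy language model is shown below. The symbols are notation introduced here: $h$ is the history (the preceding context), $f_k$ are feature functions, and $\lambda_k$ are their learned weights:

$$P(w \mid h) = \frac{\exp\left(\sum_k \lambda_k f_k(h, w)\right)}{\sum_{w'} \exp\left(\sum_k \lambda_k f_k(h, w')\right)}$$

The denominator sums over all candidate words $w'$ in the vocabulary, so the probabilities for a given history add up to one.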

3.3. Skip-Gram Models

Skip-gram models generalize N-grams by allowing for gaps in the word sequence, capturing non-consecutive dependencies between words.

Finding those dependencies makes skip-grams particularly useful for understanding broader contextual relationships. For example, a 2-skip-n-gram is a sequence of N words taken in order from a text, in which up to two words may be skipped between the selected words. We can use skip-grams as features in classification models or in language modeling to decrease perplexity.
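To illustrate, here is a small sketch that generates skip-grams for the simplest case, N = 2. The function name `skip_bigrams` and the example sentence are assumptions for illustration. It returns every ordered pair of words with at most `k` words skipped between them:

```python
def skip_bigrams(tokens, k):
    """Return all ordered word pairs with at most k words skipped between them."""
    pairs = []
    for i, first in enumerate(tokens):
        # The second word may be up to k + 1 positions after the first
        for j in range(i + 1, min(i + k + 2, len(tokens))):
            pairs.append((first, tokens[j]))
    return pairs

tokens = ["insurgents", "killed", "in", "ongoing", "fighting"]

# With k = 0, we get ordinary bigrams
print(skip_bigrams(tokens, 0))

# With k = 2, pairs such as ("insurgents", "ongoing") also appear
print(skip_bigrams(tokens, 2))
```

With `k = 2`, the pair ("insurgents", "fighting") is still excluded because three words lie between its components.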

4. Neural Language Models

Neural language models use neural networks to learn language patterns. They've gained popularity due to their ability to handle complex relationships between words and to learn useful representations directly from data.

Common approaches include recurrent neural networks (RNNs), long short-term memory networks (LSTMs), gated recurrent units (GRUs), and, more recently, transformer-based models.

4.1. Recurrent Neural Networks

Recurrent neural networks (RNNs) are designed to process sequential data via loops within the network to maintain a memory of previous inputs.

They’re a good choice for tasks where context matters, such as language modeling and sequence prediction. However, traditional RNNs struggle with long-range dependencies due to issues such as vanishing gradients.
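The recurrence can be sketched in a few lines of plain Python. This is a simplified Elman-style update, assuming a tanh activation; the names `rnn_step`, `W_xh`, `W_hh`, and `b_h`, the sizes, and the random weights are all hypothetical, and no training is involved:

```python
import math
import random

def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h_new = []
    for i in range(len(h_prev)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new

random.seed(0)
input_size, hidden_size = 3, 2
# Untrained, randomly initialized weights (for illustration only)
W_xh = [[random.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
W_hh = [[random.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
b_h = [0.0] * hidden_size

# A sequence of three one-hot "word" vectors
sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
h = [0.0] * hidden_size
for x in sequence:
    # The same weights are reused at every step; h carries the memory
    h = rnn_step(x, h, W_xh, W_hh, b_h)
print(h)
```

Because the hidden state passes through the same weights and a squashing activation at every step, the influence of early inputs tends to shrink over long sequences, which is closely related to the vanishing gradient issue.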

4.2. Long Short-Term Memory Networks and Gated Recurrent Units

Long short-term memory networks (LSTMs) and gated recurrent units (GRUs) were developed to address the limitations of standard RNNs, in particular the difficulty of learning long-range dependencies.

They use special gating mechanisms to selectively retain and forget information, which allows them to capture long-term dependencies. We can use LSTMs and GRUs for text generation, speech recognition, sentiment analysis, and similar tasks.
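To show how gating works, here is a sketch of a single GRU step with a scalar input and a scalar hidden state. The parameter names in the dictionary `p` and the chosen values are hypothetical, and some references swap the roles of `z` and `1 - z`:

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def gru_step(x, h_prev, p):
    """One GRU step for a scalar input and a scalar hidden state."""
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])  # update gate
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])  # reset gate
    # Candidate state; the reset gate controls how much of the old state is used
    h_candidate = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    # The update gate blends the old state with the candidate
    return (1.0 - z) * h_prev + z * h_candidate

# Hypothetical parameters: a very negative update-gate bias keeps z close to 0
keep_params = {
    "w_z": 0.0, "u_z": 0.0, "b_z": -20.0,
    "w_r": 1.0, "u_r": 1.0, "b_r": 0.0,
    "w_h": 1.0, "u_h": 1.0, "b_h": 0.0,
}

h = 0.8
for x in [1.0, -1.0, 0.5]:
    h = gru_step(x, h, keep_params)
print(h)  # stays very close to 0.8 because the update gate is almost closed
```

When the update gate stays near zero, the hidden state is carried forward almost unchanged, which is how gated units can retain information across many steps.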

4.3. Transformers

Transformers have revolutionized NLP by building the entire architecture around self-attention, which allows models to dynamically weigh the importance of different words in a sequence.
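The weighting can be illustrated with a simplified sketch of scaled dot-product attention in plain Python. The toy token vectors are made up, and the learned projection matrices that real transformers apply to obtain queries, keys, and values are omitted here as a simplifying assumption:

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors
        output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors used as queries, keys, and values (self-attention)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, including itself.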

Models like BERT (bidirectional encoder representations from transformers) and GPT (generative pre-trained transformer) have set new benchmarks in tasks such as question answering, text summarization, and conversational AI.

5. Large Language Models (LLMs)

Although large language models (LLMs) are a type of neural language model, their importance and impact on NLP deserve a separate section. LLMs are massive neural networks trained on vast amounts of text data. As a result, they can generate highly coherent and contextually relevant text in various applications.

LLMs differ from traditional neural language models in their scale, training data, and capabilities. They often contain billions (or even trillions) of parameters and are pre-trained on extensive datasets covering diverse topics and languages. This scale enables them to perform exceptionally well on language understanding and generation tasks.

Another feature of LLMs is their ability to generalize across tasks, often requiring minimal fine-tuning or additional training. They achieve this through few-shot, one-shot, and zero-shot learning, where the model adapts to new tasks with little or no labeled data.
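In prompt-based settings, the difference between zero-shot and few-shot use often comes down to how many worked examples we place in the prompt. The sketch below only builds prompt strings and doesn't call any model. The function name `build_prompt`, the "Review"/"Sentiment" layout, and the example texts are assumptions for illustration:

```python
def build_prompt(task_description, examples, query):
    """Build a text prompt with zero or more labeled examples before the query."""
    lines = [task_description, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final item is left unlabeled for the model to complete
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)

task = "Classify the sentiment of each review as positive or negative."
query = "The battery died after two days."

# Zero-shot: no examples, only the task description and the query
zero_shot = build_prompt(task, [], query)

# Few-shot: a couple of labeled examples precede the query
few_shot = build_prompt(
    task,
    [("I love this phone.", "positive"), ("The screen cracked immediately.", "negative")],
    query,
)
print(few_shot)
```

A one-shot prompt is the same construction with exactly one example in the list.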

5.1. Popular Large Language Models

Some of the most well-known LLMs are:

| LLM name | Developed by | Introduced | Features |
|---|---|---|---|
| GPT | OpenAI | 2018 | The GPT family (e.g., GPT-3 and GPT-4) has set benchmarks in text generation, creative writing, and conversational AI. These models use the transformer architecture and rely on pre-training followed by task-specific fine-tuning or prompt engineering. |
| BERT | Google | 2018 | BERT is designed to understand the context of words in a bidirectional manner. It excels at tasks such as question answering and sentence classification. |
| T5 | Google | 2019 | T5 treats every NLP task as a text-to-text problem. This approach allows T5 to excel in tasks ranging from translation to summarization. |
| Llama | Meta | 2023 | Llama models are optimized for efficiency and are designed to democratize access to LLM research. |
| Claude | Anthropic | 2023 | Claude emphasizes safety and alignment, making it a reliable option for generating text while following ethical guidelines. |
| Gemini | Google | 2023 | Gemini is an advanced LLM designed to combine language understanding with reinforcement learning. It excels in tasks that require reasoning and contextual understanding and performs strongly in language generation and problem-solving. |

5.2. Ethical Dilemmas and Regulations Surrounding LLMs

While LLMs have high potential, they also present significant ethical and regulatory challenges. LLMs can unintentionally reinforce social biases and propagate misinformation. Intellectual property issues also arise, as using copyrighted materials in training data calls for clear guidelines that respect creators' rights and consent.

Regulations are emerging around the world to address these challenges. Initiatives like the EU AI Act and the US Blueprint for an AI Bill of Rights aim to ensure fairness and transparency in AI systems. Transparency in model development and deployment is critical for building trust and minimizing risks in applications based on LLMs.

6. Applications of Language Models

Language models, especially LLMs, have a broad spectrum of applications across industries. One of the most prominent uses is text generation, where these models produce coherent and context-aware content. This application includes creative writing, generating summaries, drafting emails, and producing automated reports.

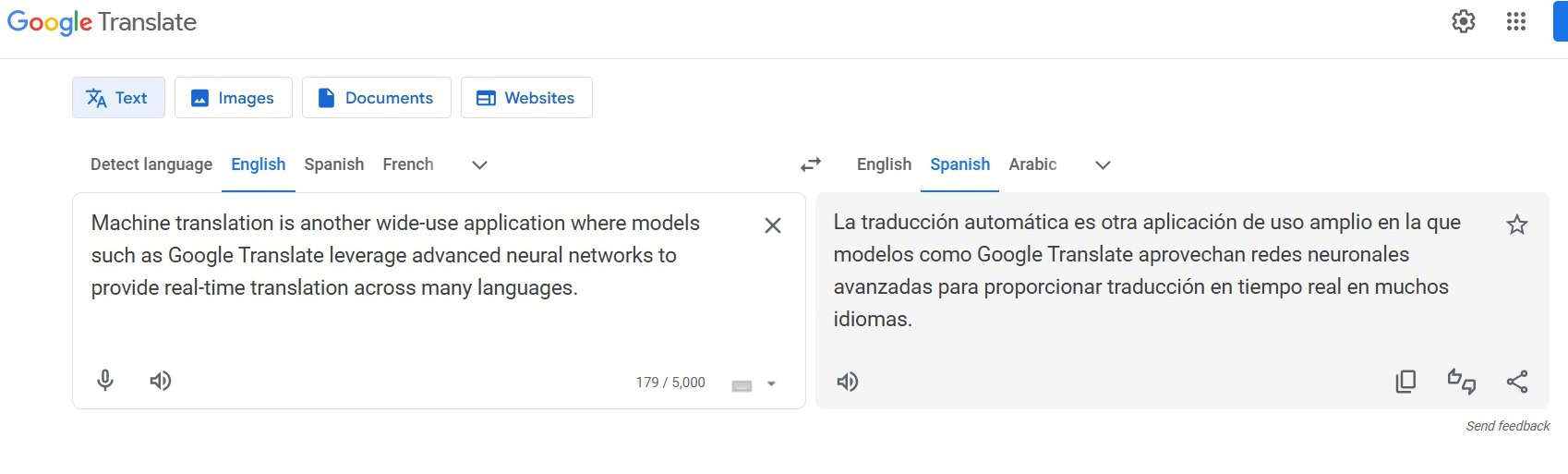

Machine translation is another widely used application, where tools such as Google Translate leverage advanced neural networks to provide real-time translation across many languages.

Similarly, conversational AI and chatbots utilize LLMs to provide human-like interactions, making customer support, personal assistance, and educational tools more efficient and engaging.

Many businesses use language models to analyze sentiment in customer reviews, social media posts, and feedback. These insights help understand public opinion, improve products, and craft targeted marketing strategies. Additionally, language models help identify inappropriate, harmful, or spammy content on social media platforms and other online forums, ensuring safer digital environments.

Also, developers use tools based on language models for code suggestions, autocompletion, and debugging. These tools reduce development time and improve code quality by providing context-aware assistance. Lastly, LLMs refine search engines by improving contextual understanding and enabling more intuitive query processing.

7. Conclusion

In this article, we explained what language models are, reviewed their main types, and looked at their applications.

Language models enable machines to understand, generate, and manipulate human language. From statistical methods to advanced neural approaches, they’ve become a part of many daily applications. As research continues, language models will likely become even more powerful, shaping the future of human-computer interaction.