1. Introduction

We often use the term orthogonalization when discussing Machine Learning (ML) topics. However, the concept comes from linear algebra and predates any ML application.

In this tutorial, we’ll discuss what it means from a theoretical perspective and why it’s so important in ML.

2. What Is Orthogonalization?

We start by defining orthogonal vectors. Then, we apply the abstraction we build to train a neural network.

2.1. Orthogonalization in Linear Algebra

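Let’s suppose we have vectors $v_1, v_2, \dots, v_k$. The orthogonalization process will give us vectors $u_1, u_2, \dots, u_k$ that span the same subspace, such that

$$u_i \cdot u_j = 0 \quad \text{for all } i \neq j \qquad (1)$$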

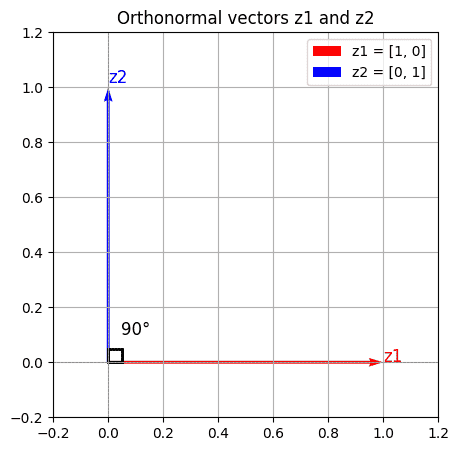

Each vector of the set should be orthogonal to the others for the set to be considered an orthogonal set. This means that they are 90° from each other; therefore, their dot product is zero.

Additionally, if the vectors have unit length in addition to being orthogonal, they are called orthonormal.

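We can put all of this together mathematically. For the orthogonalized vectors $u_1, u_2, \dots, u_k$ to be orthonormal, we need

$$u_i \cdot u_j = \begin{cases} 1 & \text{if } i = j \\ 0 & \text{if } i \neq j \end{cases} \qquad (2)$$

If that is the case, we can also say that $\{u_1, u_2, \dots, u_k\}$ forms an orthonormal basis of the span of the original vectors $v_1, v_2, \dots, v_k$.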
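The text above does not name a specific orthogonalization procedure. As one common choice, here is a minimal sketch of the Gram-Schmidt process (in its modified form) using only the Python standard library. The function names `dot` and `gram_schmidt`, the tolerance `tol`, and the sample vectors are our own illustrative assumptions.

```python
import math


def dot(a, b):
    """Dot product of two equal-length sequences of numbers."""
    return sum(x * y for x, y in zip(a, b))


def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal list of vectors spanning the same subspace.

    Modified Gram-Schmidt: each projection is subtracted from the
    running residual `w` rather than from the original vector.
    """
    basis = []
    for v in vectors:
        w = list(v)
        for u in basis:
            coeff = dot(w, u)  # component of w along the unit vector u
            w = [wi - coeff * ui for wi, ui in zip(w, u)]
        norm = math.sqrt(dot(w, w))
        if norm > tol:  # skip vectors that depend linearly on earlier ones
            basis.append([wi / norm for wi in w])
    return basis


# Hypothetical input: two non-orthogonal vectors in the plane.
vectors = [[3.0, 1.0], [2.0, 2.0]]
basis = gram_schmidt(vectors)

# Pairwise dot products should be close to 1 on the diagonal and 0 elsewhere.
for i, ui in enumerate(basis):
    for j, uj in enumerate(basis):
        print(i, j, round(dot(ui, uj), 10))
```

Normalizing each residual is what makes the result orthonormal rather than just orthogonal. Dropping the division by `norm` would give an orthogonal set with the same span.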

As an example, consider the vectors $e_1 = (1, 0)$ and $e_2 = (0, 1)$, which are orthonormal.
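As a quick check of the definition, and assuming the two vectors are the standard unit vectors $e_1 = (1, 0)$ and $e_2 = (0, 1)$ (the names are our own notation), a short worked computation could look like this:

$$e_1 \cdot e_2 = 1 \cdot 0 + 0 \cdot 1 = 0, \qquad \|e_1\| = \sqrt{1^2 + 0^2} = 1, \qquad \|e_2\| = \sqrt{0^2 + 1^2} = 1$$

The zero dot product gives orthogonality, and the unit norms make the pair orthonormal.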

But how can a concept that looks so simple be so relevant in ML?

2.2. Orthogonal Features

The main idea and abstraction we should bring from the linear algebra orthogonalization is independence.

This means that two orthogonal vectors will control independent things. In our example, the vector $e_1 = (1, 0)$ lies along the x-axis, or horizontal direction, while $e_2 = (0, 1)$ lies along the y-axis, or vertical direction.

For example, when we run principal component analysis (PCA), the principal components (PCs) are orthogonal directions, so the data projected onto them are uncorrelated. This lets us retain as much variance as possible from the original dataset while reducing dimensionality, because each component captures variability in a different direction without redundancy.

So far, we’ve considered a specific application of orthogonalization in feature engineering. Now, let’s take a broader view of ML.
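To make the PCA claim above concrete, here is a minimal sketch for two-dimensional data using only the standard library. It uses the closed-form rotation angle of a 2x2 covariance matrix rather than a general eigen-solver; in practice, a library such as NumPy or scikit-learn would normally be used. The function names `pca_2d` and `covariance` and the sample data are illustrative assumptions.

```python
import math


def covariance(a, b):
    """Sample covariance of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / (n - 1)


def pca_2d(xs, ys):
    """Return both principal directions and the projected scores for 2D data."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cx = [x - mean_x for x in xs]  # centered coordinates
    cy = [y - mean_y for y in ys]
    sxx, syy, sxy = covariance(xs, xs), covariance(ys, ys), covariance(xs, ys)
    # Angle of the principal axis of the symmetric 2x2 covariance matrix.
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    pc1 = (math.cos(theta), math.sin(theta))
    pc2 = (-math.sin(theta), math.cos(theta))  # pc1 rotated by 90 degrees
    scores1 = [a * pc1[0] + b * pc1[1] for a, b in zip(cx, cy)]
    scores2 = [a * pc2[0] + b * pc2[1] for a, b in zip(cx, cy)]
    return pc1, pc2, scores1, scores2


# Hypothetical, strongly correlated sample data.
xs = [2.5, 0.5, 2.2, 1.9, 3.1, 2.3, 2.0, 1.0, 1.5, 1.1]
ys = [2.4, 0.7, 2.9, 2.2, 3.0, 2.7, 1.6, 1.1, 1.6, 0.9]

pc1, pc2, scores1, scores2 = pca_2d(xs, ys)
print("dot(pc1, pc2):", pc1[0] * pc2[0] + pc1[1] * pc2[1])
print("covariance of the original features:", covariance(xs, ys))
print("covariance of the PC scores:", covariance(scores1, scores2))
```

The original features have a clearly nonzero covariance, while the covariance of the two score columns should vanish up to floating-point error. That is the sense in which the components carry no redundant information.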

3. Orthogonalization in Machine Learning

Let’s imagine a full machine-learning workflow. We have a dataset that we split into training, validation, and test sets. We design an architecture and set its hyperparameters. Our final goal is a model that performs sufficiently well on all three subsets as well as on real-world data.

But what if we change something to improve the performance in the training set and this negatively affects the performance in the test and the validation sets?

For this reason, we should treat the performance on each dataset as orthogonal to the others: ideally, each adjustment we make affects only one of them. Depending on which one falls short, we choose a different approach to fix it.

3.1. Improving the Performance

First, suppose the performance on the training set is not good enough. We can increase the capacity of the neural network by adding layers, or we can change the optimization algorithm.

However, if our model does not perform well on the validation set, we should consider adding some regularization. Alternatively, we can use a larger training set if it’s possible.

But what if we can’t perform well on the test set? If we’re doing well on the validation set but not on the test set, we’ve probably overfit to the validation set, so we’ll likely need a bigger validation set.

Finally, if we don’t have good results in the real-world data, what can we do? We should take a second look at the problem formulation. It might even be necessary to change the cost function or define a different validation set.

As we can see, there are different things we can try depending on our problem. Going back to the concept of orthogonality, we should think of each of these solutions as independent of the others, as if we were adjusting different orthogonal directions to improve our model’s performance in the space of performances.
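The diagnostic order described in this section can be sketched as a small helper that looks at one stage at a time. The function name `suggest_adjustment`, the error-rate inputs, and the thresholds `target_err` and `max_gap` are hypothetical choices for illustration, not recommended values.

```python
def suggest_adjustment(train_err, val_err, test_err, real_err,
                       target_err=0.05, max_gap=0.02):
    """Return the first stage that falls short and the kind of fix to try.

    All inputs are error rates in [0, 1]. The thresholds are assumptions:
    `target_err` is the acceptable training error, and `max_gap` is the
    acceptable increase in error from one stage to the next.
    """
    if train_err > target_err:
        return "training: use a bigger network or a different optimizer"
    if val_err - train_err > max_gap:
        return "validation: add regularization or use a larger training set"
    if test_err - val_err > max_gap:
        return "test: use a bigger validation set"
    if real_err - test_err > max_gap:
        return "real world: revisit the cost function or the validation set"
    return "no adjustment needed"


# Hypothetical error rates: good on training, noticeably worse on validation.
print(suggest_adjustment(train_err=0.03, val_err=0.10,
                         test_err=0.11, real_err=0.12))
```

Checking the stages in order and returning only the first shortfall mirrors the idea of tuning one thing at a time. Later stages are only examined once the earlier ones are within tolerance.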

4. Conclusion

In this article, we presented how the concept of orthogonalization can be used in Machine Learning. By applying it, we tune one aspect of our workflow at a time, aiming for the desired performance on the problem at hand.

We should keep in mind that the adjustments must be made carefully and one at a time. By following the guidelines provided here, we can tackle each situation independently, so that we don’t harm the performance on one subset while improving it on another.